The Augmented World Expo (AWE) is the leading extended reality (XR) industry conference, with over 6,000 attendees, 300 exhibitors and nearly 600 speakers. The 15th annual AWE wrapped last week in Long Beach, CA. I had the opportunity to reprise my role as main stage host, serve as a panelist on How Generative AI Can Make XR Creation More Accessible (see episode 33), and give a talk on Building for the Future Of Human Cognition. I also attended several talks and walked the expo floor, trying numerous demos.

Across these experiences, I kept returning to the question: What can we learn from XR’s history to create desirable spatial computing futures? This theme of learning from XR’s history was set in AWE co-founder Ori Inbar’s opening keynote: “If we want spatial computing to one day replace 2D computing, we all have to become history buffs”.

In this week’s episode, I’ll share key milestones in XR history, and three takeaways on what this history can teach us about building for the future. I’m defining XR as the umbrella term for all immersive technologies, including augmented reality (AR), virtual reality (VR), and mixed reality (MR).

A Brief History of XR

Sensorama (1962)

I’m starting the clock on XR history where AWE did, with cinematographer Mort Heilig’s Sensorama - a machine that is one of the earliest known examples of immersive, multi-sensory technology.

This arcade-style theater cabinet would simulate senses beyond sight and sound. This approach was inspired by Heilig’s fascination with the human sensory system and perceptual illusions, sparked by the 1952 film, This is Cinerama. (Cinerama was designed to introduce the widescreen process, broadening the aspect ratio to involve the viewer's peripheral vision). Back to Sensorama…

The viewer would watch one of six short films adapted for this invention. The experience included stereo speakers, a stereoscopic 3D display, fans, smell generators and a vibrating chair.

In the XR History Museum on the AWE Expo floor, I had the opportunity to experience a VR-recreation of the Sensorama in the Meta Quest 3 headset, courtesy of The Immersive Archive Project USC Mobile & Environmental Media Lab. The first film I experienced was Heilig’s “Motorcycle”, depicting riding a motorcycle on the streets of Brooklyn. One of the folks in the booth held up a small fan and a vaguely rubbery-smelling candle to my face, simulating the wind and smells of the ride. In the next film, A Date with Sabina, I was the person on said date. Shot from a first-person view, “I” passed Sabina a bouquet of flowers in the film, while the person in the booth held a bouquet of flowers under my nose.

Heilig understood we are inherently multisensory beings, and that to dial up immersion, you should engage more senses. Relatedly, by having the wind and candle smell match the audio-visual experience, Heilig reduced sensory conflict - the mismatch of afferent signals from visual, vestibular, and somatic sensors that is widely accepted to explain motion sickness in VR.

The Ultimate Display (1965)

Another important milestone in XR history - and a recurring mention at AWE - was Dr. Ivan Sutherland’s paper, The Ultimate Display (1965). Sutherland described a display concept that could simulate reality to the point where one could not tell the difference from actual reality.

This ultimate display would include: a virtual world viewed through a head-mounted display (HMD) and made to appear realistic through augmented 3D sound and tactile feedback; the ability for people to interact with objects in the virtual world in a realistic way; and computer hardware to create the virtual world and maintain it in real time. In Sutherland’s words, “A chair displayed in such a room would be good enough to sit in…with appropriate programming such a display could literally be the Wonderland into which Alice walked.” Sutherland would go on to be remembered as a leader in computer graphics, cementing 3-dimensional interactions with computers, including with 3D virtual models.

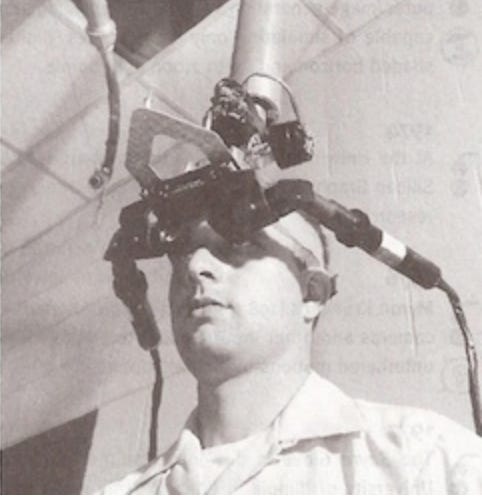

In 1968, Sutherland and his student Bob Sproull created what’s widely called “The Sword of Damocles” - the first VR/AR HMD that was connected to a computer and not a camera.

It strikes me that Sutherland described and then built technology that rendered a new layer of reality onto which we can extend our cognition. This is the same layer I discussed in my AWE talk, one that also yields a key differentiator of XR from other technology: natural, embodied interactions, given that we expect to interact with this digital layer similarly to how we interact with the physical world.

This brings me to a provocation inspired by Ori’s keynote: What would a potential future look like, in which we pursued a spatial computing paradigm rather than a 2D graphical user interface (GUI) paradigm?

The 2D GUI feels like a stopgap born of the technological constraints of the time. Computers of the 1960s had limited processing power and display capabilities, which were better suited to simple, flat interfaces than to complex 3D environments. The same year the rather terrifying-looking Sword of Damocles was developed (1968), Douglas Engelbart and his team gave the Mother of All Demos, showing now-familiar GUI paradigms such as the computer mouse, word processing, and dynamic file linking. The GUI matched the technology available at the time, but came at the cost of adding a layer of abstraction for the user.

The Super Cockpit

The last major XR milestone we’ll discuss is the Super Cockpit by Dr. Tom Furness (often referred to as the “grandfather of VR”), who presented a keynote at AWE. In 1966, Tom - then an Air Force officer - was tasked to couple a human to a fighter jet, via cockpit design. As aircraft became more advanced, so did the cockpit. He identified challenges such as the increased cognitive load of having to switch attention between what’s happening inside versus outside the aircraft, and the complexity of having to interact with 50 computers (i.e., displays in the aircraft). Constrained by being unable to change the design of the physical cockpit, Tom turned his focus to increasing bandwidth to and from the pilot’s brain - in other words, another way to project information.

Tom developed a visually-coupled system in 1971, in which information was projected on a helmet visor. The system was hooked up to sensors on the aircraft and aimed those sensors based on where the pilot was looking. Combined with other projected information, this provided a way to “see” through the cockpit.

Further aircraft advancement led to further complexity. In his AWE talk, Tom described a pilot’s experience in F-16 aircraft, ca. 1978: “300 switches, 75 displays, 11 switches on the control stick, 9 switches on the throttle, pulling Gs at the boundary of consciousness and being shot at at the same time”. Talk about high cognitive load! This challenge fueled his development of a wearable Super Cockpit, with information from all these sources fused into a single “gestalt”: an instantly understandable image.

The Super Cockpit provided us with several lessons. First, people can quickly assimilate information when it’s presented spatially. Doing so “awakens spatial memory”, as Tom described - you’re looking at a place rather than a picture. This coupling of immersion and 3D interactivity created presence, with applications including and beyond defense (e.g., education, healthcare training).

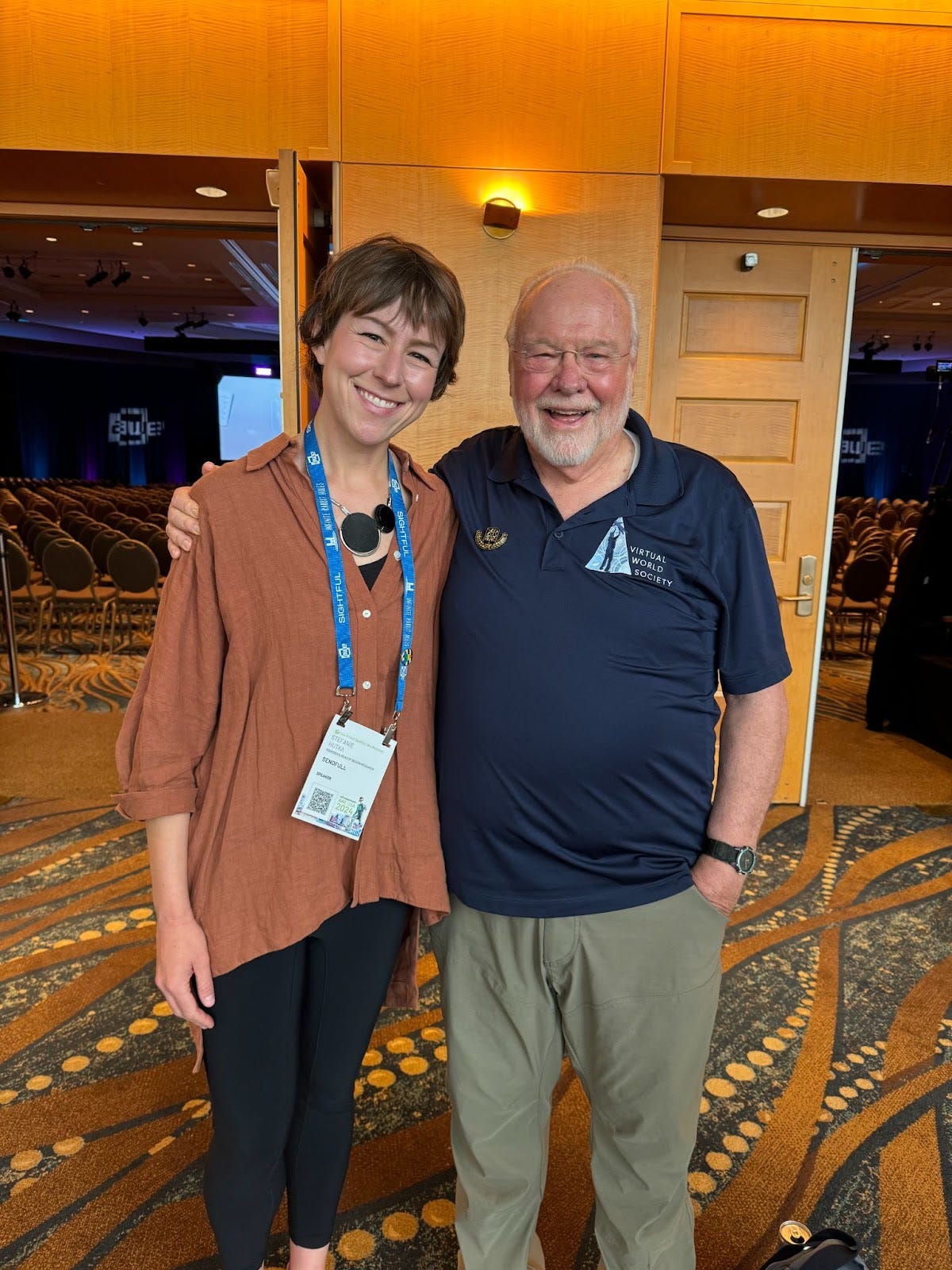

At AWE, Tom announced the Super Cockpit for the Mind initiative, part of the Virtual World Society that he founded. There are two aspects of the Super Cockpit for the Mind:

The outward-facing, to help us understand how to best interface with the Metaverse (think: how to not build the dystopian, cognitive-bandwidth-eating XR future depicted in Keiichi Matsuda’s Hyper-reality).

The inward-facing, to help us expand our own capabilities (e.g., “see” beyond our limits of awareness/what isn’t brought into consciousness. One example from Tom’s keynote: inconspicuous displays using stimulation of the far peripheral retina).

As a design researcher thinking about the future(s) of human cognition, I’m particularly excited by this Super Cockpit for the Mind approach to building for XR.

Takeaways

The mission of this year’s AWE, per Ori’s keynote, was “Learn from XR’s history to create the future”. Looking across these three XR milestones, we can draw three takeaways to help us build more desirable spatial computing futures (at least from a purely human-computer interaction perspective):

From Mort Heilig’s Sensorama: Increase immersion through engaging as many senses as possible; the more convergent senses, the less potential for sensory conflict (and motion sickness).

From Ivan Sutherland’s Ultimate Display: An ideal display creates a reality layer onto which we can extend our cognition. We expect to interact with this digital layer similarly to how we interact with the physical world, creating the potential for more natural interactions.

From Tom Furness’s Super Cockpit: Spatially-presented information helps people quickly assimilate and learn information, presenting significant opportunities for use cases like education and training. Just as Tom increased cognitive bandwidth by spatially visualizing fighter jet display information, the next wave of XR should seek to help us increase cognitive bandwidth as we navigate digital worlds and expand our capabilities beyond the limits of our awareness.

For my past writings on XR history, check out my episode on what we can learn about XR design from 50 years of aviation display human factors research. For more coverage of AWE, check out this Forbes summary by Charlie Fink, and last week’s episode, Live from AWE. For more on the history of VR, check out the Virtual Reality Society.

Human-Computer Interaction News

Researchers use large language models to help robots navigate: If we want a robot to do our laundry for us, it will need to combine our instructions with its visual observations to determine the steps it should take to complete the task. Researchers from MIT offer a new technique that can plan a trajectory for a robot using only language-based inputs. While it can't outperform vision-based approaches, it could be useful in settings that lack visual data to use for training. Read the paper here.

TestParty raises $4M to help automate the coding for accessible websites: TestParty, an AI-powered software compliance company, announced a $4 million seed round on June 25. The company seeks to make websites more accessible by automating testing, remediation, training, and code monitoring to bring websites into compliance with accessibility standards. Today, nearly all of the world’s most popular website homepages are not compliant with the Web Content Accessibility Guidelines.

Roblox’s road to 4D generative AI: Morgan McGuire, Roblox's Chief Scientist, describes how the company is building toward 4D generative AI, going beyond single 3D objects to dynamic interactions. This technology could help simplify more engaging virtual content creation, reducing the barrier to entry for more creators.

Is your team working on AR/VR solutions? Sendfull can help you test hypotheses to build useful, desirable experiences. Reach out at hello@sendfull.com

That’s a wrap 🌯 . More human-computer interaction news from Sendfull next week.