ep. 32: Embodied, by design


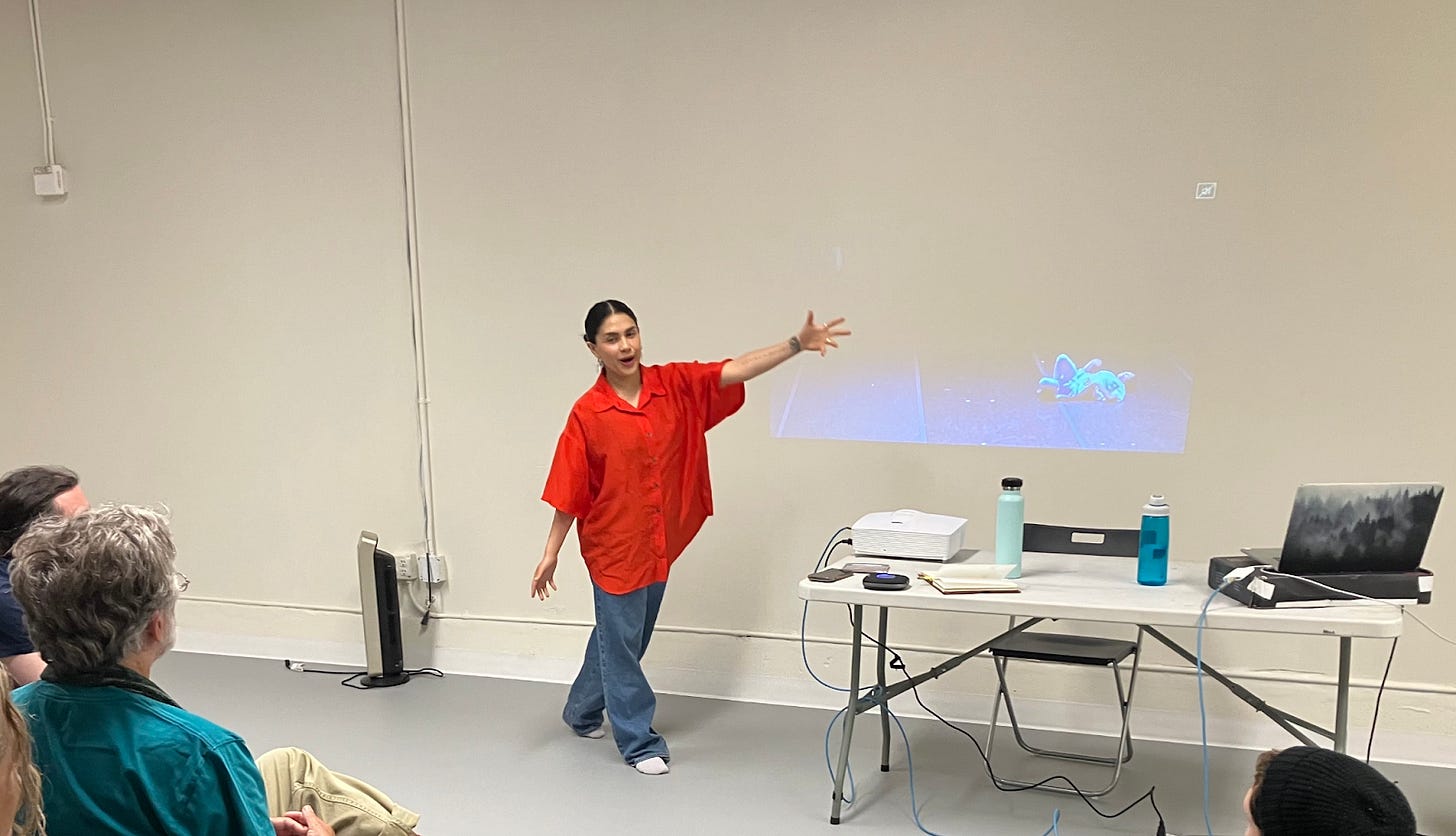

On May 29, I presented at Y-Exchange, an intersectional platform featuring artists at the overlap of technology, science and performing arts, co-presented by Kinetech Arts, Djerassi Resident Artists Program and ODC. My talk, Embodied, by Design, discussed how extending human cognition with immersive technology starts with understanding embodied cognition: the theory that cognitive processes are deeply rooted in the body's interactions with the physical world. I shared the stage with movement artist Gizeh Muñiz Vengel, who discussed themes of embodiment through the lens of dance performance.

In this week’s episode, I share a summary of my talk, learnings from Gizeh’s presentation, and takeaways for building immersive technology solutions on augmented and virtual reality (AR/VR).

Embodied cognition is fundamental to human experience

Embodied cognition theory holds that cognition is not solely a brain-bound phenomenon; it is distributed across our entire bodily experience. For example, as you catch a ball, your eyes detect elements like shape and color, engaging your sensory system. Your perceptual system recognizes these signals as a ball coming towards you, and your cognitive system uses this information to decide what action to take. Your motor system then sends signals to your muscles to catch the ball. All of this occurs against the backdrop of a given environment, which also influences these systems (e.g., is the ground slippery or dry? Is the sun impeding your view of the ball?). As your hand moves, continuous sensory feedback updates the other systems.

I wove a violin excerpt into my talk to illustrate a key point about the relationship between embodied cognition and tools. Musical instruments are tools that musicians shape through practice and technique. Over time, these instruments also shape the musicians, influencing all of the systems we just discussed. This echoes a quote by Canadian philosopher and media theorist Marshall McLuhan: “We shape our tools and therefore our tools shape us.” Technology is both a product of human creativity and a transformative force that reshapes our behavior and cognition.

“We shape our tools and therefore our tools shape us.” - Marshall McLuhan

Understanding embodied cognition can help us build more useful immersive technology

Embodied cognition is a key aspect of the immersive technology user experience. Using AR/VR technologies, we are able to engage with digital content that has spatial depth, while maintaining situational awareness of our physical environment (more on this in episode 10). Our interactions with this spatial content are embodied. Interacting with a digital object is not exactly like interacting with a physical one, which provides tactile information like texture and mass, but it is much closer to that experience than interfacing with flat, 2D content and graphical user interfaces on a screen.

These embodied interactions are a key superpower of immersive technologies - a unique strength inherent to the medium. They lend themselves particularly well to enhancing learning (from academia to corporate training). Imagine learning about the layers of the earth from a 3D model that you can walk around and whose labelled layers you can interact with, instead of from a flat, 2D diagram.

Let’s connect these concepts with cognitive neuroscience research. Our brains represent space across several different “zones”:

personal space: space occupied by our body

peripersonal space: space immediately around our body that the brain encodes as perceived reaching and grasping distance

extrapersonal space: space beyond reaching and grasping distance

We can remap these zones using physical objects (aka tools). For example, when we use a stick to interact with our environment, space previously encoded as extrapersonal is remapped to peripersonal space. Taking this one step further, we have the rubber hand illusion, a perceptual illusion in which participants perceive a physical, rubber model hand as part of their own body. This phenomenon can be replicated in virtual reality and mixed reality (MR) using a digital model hand instead of a physical one.
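To make the zone remapping concrete, here is a deliberately simplified toy model in Python. It assumes the zones can be expressed as distance thresholds from the body surface, and the 0.7 m arm reach is a hypothetical illustrative value, not a measured one. The brain's actual boundaries are graded and dynamic, so treat this as a sketch of the idea that a tool extends "reachable" space, not as how the brain computes it.

```python
from enum import Enum


class Zone(Enum):
    PERSONAL = "personal"            # space occupied by the body
    PERIPERSONAL = "peripersonal"    # within reaching and grasping distance
    EXTRAPERSONAL = "extrapersonal"  # beyond reaching and grasping distance


# Hypothetical arm reach in meters; an illustrative assumption only.
ARM_REACH_M = 0.7


def classify(distance_m: float, tool_length_m: float = 0.0) -> Zone:
    """Classify a point by its distance from the body surface (toy model).

    Holding a tool (e.g., a stick) adds its length to the effective reach,
    which remaps some extrapersonal space into peripersonal space.
    """
    if distance_m < 0 or tool_length_m < 0:
        raise ValueError("distances must be non-negative")
    if distance_m == 0.0:
        return Zone.PERSONAL
    if distance_m <= ARM_REACH_M + tool_length_m:
        return Zone.PERIPERSONAL
    return Zone.EXTRAPERSONAL


if __name__ == "__main__":
    # An object 1 m away is out of reach by hand...
    print(classify(1.0).value)
    # ...but within reach when holding a hypothetical 0.5 m stick.
    print(classify(1.0, tool_length_m=0.5).value)
```

Running it prints `extrapersonal` and then `peripersonal`: the same point changes zones once the tool extends the effective reach.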

Furthermore, the more sensory modalities we engage, the greater the potential for a pleasing immersive experience. These multi-sensory experiences must be designed so that they are coherent with both other digital elements and the physical environment (more on this here, where I discuss one of my favorite immersive experiences, Tónandi on Magic Leap).

In short, spatial experiences as afforded by AR/VR technology can create a new layer of reality onto which we can map - and therefore, extend - our cognition. Effectively leveraging multiple senses amplifies these effects.

Art and technology co-evolve, revealing new ways to extend human cognition

The power to create this new layer of reality onto which we can extend our cognition is not to be taken lightly. We must carefully consider the effects of the experiences we enable and create with AR/VR, both within a given experience (e.g., keeping people aware of their surroundings) and at a broader societal level (e.g., keeping people present and inviting them to engage with the technology intentionally). This is one of the areas where we can learn from artists.

During my time at Adobe, I had the pleasure of working with numerous artists exploring the possibilities of immersive technology design in the Adobe AR Residency. Artists explored different uses of space and vantage points (e.g., Zach Lieberman and Molmol Kuo), combined illustration, music and animation (e.g., Heather Cathleen Dunaway Smith) and explored the concepts of art creation and ownership (e.g., John Orion Young). Artists can also vividly illustrate potential futures we don’t want to work towards. For instance, designer Keiichi Matsuda's speculative design fiction video, Hyper-Reality, shows us what not to do. This guidance will only become more critical as immersive technology and artificial intelligence continue to overlap.

Sharing the stage with Gizeh and hearing her describe how she approaches movement practice, I was struck by how we were examining embodiment from different perspectives. Her performances are about being fully present in movement and carefully observing how experience emerges. Technology, such as lighting, supports her physical performance but is not the focal point.

Takeaways

As we design new immersive experiences, we have significant power to create a new layer of reality onto which we can extend our cognition. Let’s remember to:

Design to keep people present: At a human-computer interaction level, this is about designing interfaces to increase situational awareness and reduce cognitive load. At the societal level, this is about designing immersive experiences that can enhance our world, while prioritizing engagement with the people and things in our physical environment. For example, people can choose to use AR glasses to visualize a root system in a botanical garden, but that AR experience remains secondary to experiencing the physical garden itself.

Leverage the strengths of the medium: Not everything needs to be an immersive experience built on AR/VR technology. Play to the strengths of the medium (check out this post for guidance). For instance, porting a flat, 2D spreadsheet 1:1 into a VR headset adds no value to the experience and makes the sheet more challenging to interact with. On the other hand, if the VR experience helps you spatialize datapoints and explore multidimensional data hands-on (e.g., GraphXR), then you are playing to the unique strengths of the medium.

Learn from - and support - artists: When building new technology (e.g., AR/VR, gen AI), we should pay special attention to how artists are pushing the edges of that technology, and invite them into the development process whenever possible. What are they teaching us about the experiences we want - and don’t want - in the future? Check out this list of artists who were using AI in their practice even before ChatGPT.

Upcoming Event

I’m presenting at the 15th annual Augmented World Expo (AWE), the leading extended reality industry conference. I’ll be giving a talk on Building for the Future of Human Cognition, introducing keynote speakers on Main Stage Day 1, and speaking on the panel How Generative AI Can Make XR Creation More Accessible. Interested in attending AWE? Use SPKR24D for a 20% discount.

Human-Computer Interaction News

AI Is a Black Box. Anthropic figured out a way to look inside: Anthropic researchers have made significant strides in understanding the inner workings of large language models (LLMs). Researchers have been focused on "mechanistic interpretability," a technique akin to reverse-engineering LLMs to decipher the neural patterns that generate specific outputs. Their breakthrough involved using dictionary learning, a method to identify combinations of artificial neurons that represent distinct concepts (e.g., burritos or biological weapons). This research holds potential for improving AI safety by identifying and controlling harmful features within the models. Other research teams (e.g., DeepMind, Northeastern University) are also working on similar interpretability issues, highlighting a growing community focused on making AI more transparent and safe.

The Spacetop G1 is an AR laptop with no screen: The Spacetop G1, which uses AR glasses instead of a traditional screen, is now available for preorder ($1,900 USD). The system is designed for productivity (e.g., work anywhere with a 100-inch virtual screen). Its lighter weight relative to other extended reality headsets (e.g., Apple Vision Pro) suggests that it’s built for extended use on the go. That said, its 50-degree field of view will limit the virtual monitor experience. It does support connecting peripherals like standalone monitors, suggesting that the system is designed for portability: for instance, you could work with a real monitor at the office, then continue working on a plane via the AR glasses.

AI headphones let wearer listen to a single person in a crowd, by looking at them just once: Researchers at the University of Washington have developed proof-of-concept AI-powered headphones that allow users to focus on a single speaker in a noisy environment by looking at them for 3-5 seconds. This "Target Speech Hearing" system then isolates and plays only the enrolled speaker's voice in real time, even as the user and speaker move around. This innovation can provide clearer auditory focus in crowded settings, potentially improving environments like offices, social gatherings, and public spaces where background noise typically disrupts communication. Potential applications include earbuds and hearing aids. Read the research paper here.

Is your team working on AR/VR solutions? Sendfull can help you test hypotheses to build useful, desirable experiences. Reach out at hello@sendfull.com

That’s a wrap 🌯 . More human-computer interaction news from Sendfull next week.