ep.1: Berkeley AI Summit, GPT-4V, Meta Connect, Spatial Translation Framework

Weekly newsletter covering Design Research at the intersection of Spatial Computing and AI.

“Don’t focus on AI, focus on how AI supports the use case” - Barak Turovsky, VP of AI, Cisco

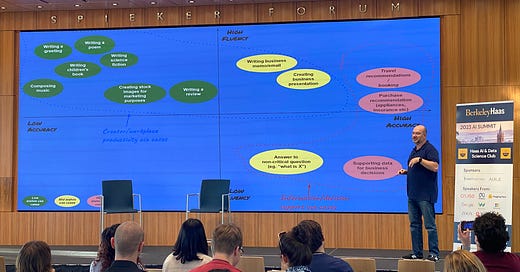

Building AI problem-first versus technology-first was a central theme at Sunday’s Berkeley Haas AI Summit. Featuring speakers like Pieter Abbeel, Barak Turovsky, and Nazneen Rajani, the Summit included panels examining multiple dimensions of AI, such as foundation model development, responsible AI, and AI entrepreneurship and investment. In true design researcher fashion, I created an affinity map to identify key themes.

Affinity map to identify key themes of the AI Summit, bc design research.

The five takeaways

Use the right AI for the job (to be done): We’re building AI tech-first when we should be building customer-problem-first. We also tend to focus on use cases that require high model accuracy when, in the words of Pieter Abbeel, “we need to start with something more forgiving”. AI is good, but not good enough for most things on its own (see: why you wouldn’t want to take a nap in a Tesla). Lower-stakes use cases where “pretty good” is sufficient are a key opportunity, for example creator and workplace productivity, like creating stock images for marketing purposes. “Solving the right problem the right way” was also a key criterion for investors in the AI space.

Barak Turovsky plots AI use cases along two axes: fluency (how natural-sounding the output is) and accuracy (how correct the output is).

Until we can design AIs that effectively elicit what humans want, design for uncertainty in your model: People are generally bad at coherently expressing what they want. A key challenge, therefore, is designing AI products that elicit what their human users really want to do. We can leverage what we know about humans to tackle this challenge; for example, we are better at expressing what we want in the moment when choosing between two given options. In the meantime, we must design our foundation models for this uncertainty in true user intent.

Make it easy for a user to immediately understand how good your model is: When you tell a customer that an “AI hallucinates 5% of the time”, they will likely hear it as playing Russian roulette with the product (another reason to start with forgiving use cases; see takeaway #1). Instead, what if we highlighted vetted, true information in the output? Aible AI’s ChatAIble demonstrates this “if it’s blue, it’s true” approach to communicating model accuracy to users.

Arijit Sengupta, CEO, Aible AI, describes ChatAIble - “if it’s blue, it’s true” helps users know what model output is accurate versus a hallucination.

Collect immediate feedback from end users and use that data to improve model output: We’re already seeing the gen-AI version of “if you’re not paying for it, you’re the product being sold”. Arijit Sengupta argued that if we don’t collect immediate feedback from end users and use it to adapt models to them, we’re effectively commoditizing people instead of empowering them. Immediate feedback can also help people get more value from the model, making this a win-win approach.

We need a framework for developing model evaluation approaches: Evaluating whether you’re receiving meaningful, safe, or comparable results from one model versus another is currently highly subjective. “There is no good benchmark to evaluate chatbots”, per Nazneen Rajani, Research Lead at Hugging Face. Design researchers seem well-situated to solve this challenge, given our experience measuring and benchmarking user experience quality.

Honorable mention quote:

“Hallucinations are very human behavior.” - Barak Turovsky

Human-Computer Interaction News

Multimodal LLMs build out their perceptual system: Last week, OpenAI rolled out multimodal capabilities in ChatGPT to paying subscribers for the first time. ChatGPT “can now see, hear, and speak”, with image understanding powered by GPT-4V(ision). As we build out multimodal LLMs, we need a more natural and intuitive user experience for interacting with AI, driven by multimodal design principles. I’ve been thinking about this for a while in the context of extended reality user experiences; this flips that script, because here ChatGPT is the one perceiving the inputs. I’m following this closely to see how we approximate the brain’s “association cortices”, which combine information from multiple senses.

The Design of Everyday (AI) Things: Taking a page from the classic UX book The Design of Everyday Things, Sam Stone, Head of Product at Tome, presents a set of UI/UX principles for building better AI tools, applying the concepts of affordances, feedback, and constraints. His conclusions: “Don’t aim for a Hole-In-One”, “User feedback isn’t free”, and “Treat chatbot interfaces skeptically”.

Generative AI Unleashed: Charting The Enterprise Future: This Forbes article by Mark Minevich describes real-world use cases demonstrating the disruptive potential of enterprise AI. A common theme across use cases is leveraging generative AI’s pattern recognition capabilities to extract valuable insights from large enterprise data repositories, such as helping accelerate the most time-consuming and costly stages of drug discovery. The article also offers guidelines for building a strategic roadmap for responsible adoption of enterprise AI.

Is AI in the eye of the beholder? A recent MIT paper showed that “the way that AI is presented to society matters, because it changes how AI is experienced”. The authors found that priming users’ beliefs about an AI shapes human–AI interaction and can increase the AI’s perceived trustworthiness, empathy, and effectiveness. To quote senior author Pattie Maes, “A lot of people think of AI as only an engineering problem, but the success of AI is also a human factors problem”. What we call AI and how we talk about it has a major impact on how effective AI systems seem to users.

Apple’s Approach to Immersive VR on Vision Pro is Smarter Than it Seems—And Likely to Stick: The Road to VR article by Ben Lang describes how Apple is baking in the expectation that people don’t want their apps full-screen all the time on the Vision Pro. Going full-screen is a conscious opt-in action, rather than a default you have to opt out of. This is compelling from an HCI perspective because it builds on existing mental models: how we already choose when to go full-screen on computers and phones.

Meta Connect Round-up: Meta introduced mixed reality (MR) on Quest 3, which it bills as the world’s first mainstream MR headset to blend the physical and digital worlds. This is significant because it brings MR to a large customer base, helping build mental models for this new medium.

We also heard Meta CTO Andrew “Boz” Bosworth describe “augments” coming to Quest 3 next year: interactive, spatially aware digital objects that come to life all around you. Importantly, “every time you put on your headset, they’re right where you left them.” For example, you could place life-size artifacts from games like Population: One in your living room, just like you would an IRL trophy you wanted to showcase. This helps meet user expectations and makes for more natural interactions, because we quickly start to ascribe properties of real-world objects to digital objects in MR, including staying where you put them (aka persistence).

We also learned more about the next-gen Ray-Ban Meta smart glasses, which will integrate with Meta’s AI tools. There’s an exciting potential future here: helping people learn more about what they’re looking at (e.g., recognizing landmarks or seeing real-time information), all while heads-up and hands-free.

Work

Spatial Translation Framework: Spatial computing is going mainstream, with revolutionary mixed reality devices coming from Apple (Vision Pro) and Meta (Quest 3). Product teams will want to bring their experiences to these new environments.

As they do, they will face the following challenges:

When should we adapt existing smartphone and laptop experiences to headsets?

Once we’ve decided to adapt an experience, what specifically should we “spatially translate” onto a headset?

Should we consider building a system that uses both smartphone/laptop and headset for inputs and outputs?

To help teams address these challenges, I built the Spatial Translation Framework:

The Spatial Translation Framework helps people understand when, what and how to adapt existing experiences for spatial computing headsets.

We can use it to inform:

When to adapt existing experiences to headsets.

What to build (or keep) on more traditional 2D systems (e.g., laptops and smartphones), versus in a spatial computing system (e.g., mixed reality headset).

How to leverage interoperability between these systems for a more seamless user experience.

Do you have an iPhone or iPad app that you’re considering porting to the Vision Pro? We’d love to work with you to apply our Spatial Translation Framework. Reach out at hello@sendfull.com

That’s a wrap 🌯. More human-computer interaction news from Sendfull next week.