ep.3: AI to Augment Human Capabilities, GenAI Meets AR Glasses, Customer Understanding Toolkit to Build Better Products


“We need to design for people who are predictably irrational to interact with models that are unpredictably rational.” - Dr. Niloufar Salehi, UC Berkeley

The BayLearn Machine Learning Symposium, held on October 19 in Oakland, CA, brought together ML scientists from the San Francisco Bay Area to build community and exchange ideas. Keynote speakers included AI leaders Professors Fei-Fei Li (Stanford), Percy Liang (Stanford), Christopher Ré (Stanford), and Niloufar Salehi (UC Berkeley).

Professor Fei-Fei Li driving home a key point of her talk: AI should augment, not replace, human capabilities.

Three takeaways

Design AI to augment - not replace - human capabilities: Building AI that sees what humans don’t see, but want to see, is key to ensuring AI plays a positive role in society. This allows AI to augment, rather than replace, human capabilities. For example, people experience change blindness, in which a visual stimulus can change without its observer noticing. We’re not talking about subtle changes: people easily miss dramatic differences between two seemingly identical photos. In a related phenomenon, inattentional blindness, people fail to notice a person in a gorilla suit walking through a basketball game. These gaps in human capabilities are key opportunities for AI to be our eyes. Prof. Li highlighted how this is especially salient in healthcare, where medical errors are the third leading cause of death in America and we’re facing a human labor shortage (e.g., America needs 1.2 million new registered nurses by 2030 to address the current shortage). That is, we need more eyes to keep patients safe. Many of the estimated 400,000 American deaths each year due to medical errors could be prevented by using electronic sensors and AI to help medical professionals monitor and treat patients, improving outcomes while respecting privacy. When you’re thinking about your next AI product or feature, consider: how might we design this to augment, rather than replace, human capabilities?

Don’t build a robot to open presents - the importance of focusing on human needs: As we work towards embodied AI that closes the loop between perception and action (think of the difference between a robot detecting an egg and a robot picking up that egg without crushing it), we’ll have the opportunity to solve for an array of use cases. How do we decide which problems to solve for people with this new tech? Enter design research. In the 2022 “Behavior-1K” survey of which household activities people would most like help with, cleaning tasks made the top three (“clean after wild party” was #1). Among the lowest ranked were “open b-day presents” (#1983), “buy a ring” (#1989), and “play squash” (#1990). While building a present-opening robot might be a technological feat, you’re going to have a hard time finding product-market fit. Learning about human needs and building your solutions around them is the better path forward.

Design human-AI interactions that guide reliable use: Dr. Niloufar Salehi shared examples from her lab of how we can design AI tools that help people craft better inputs, evaluate outputs, and guide the synthesis of information. For instance, quality estimation models can help physicians decide when to rely on machine translation output. This addresses a high-consequence user problem: currently, 17.5% of translated emergency discharge instructions across seven languages do not retain their meaning, with Farsi and Armenian faring worst (error rates of 32.5% and 45%, respectively). Dr. Salehi also shared a sneak peek at Yodeai, her team’s new knowledge synthesis engine, which lets a user guide the creation of systems that combine large language models (LLMs, which are flexible but unpredictable) and knowledge graphs (KGs, which are predictable but static). The result is the ability to organize unstructured data, zoom in and out of your data to gain different perspectives, and get answers based on your data. I used Yodeai to help distill key takeaways from the symposium for this newsletter, a kind of “ChatGPMe” where I use my own prompts to synthesize information from my own notes. Sign up for Yodeai early access here.

Human-Computer Interaction News

To excel at engineering design, generative AI must learn to innovate: In a new study, MIT engineers trained several AI models on thousands of bicycle frames, sourced from a dataset of full bicycle designs. When asked to design a new bicycle frame, deep generative models (DGMs) generated frames that mimicked previous designs but faltered on engineering performance and requirements. In contrast, DGMs designed with engineering-focused objectives (not just statistical similarity) produced more innovative, higher-performing frames. This suggests that, with careful consideration of design requirements, AI models can be an effective design "co-pilot" for engineers, helping them create innovative products more efficiently. Connecting back to Prof. Li’s keynote, this approach could help AI augment, rather than replace, human capabilities.

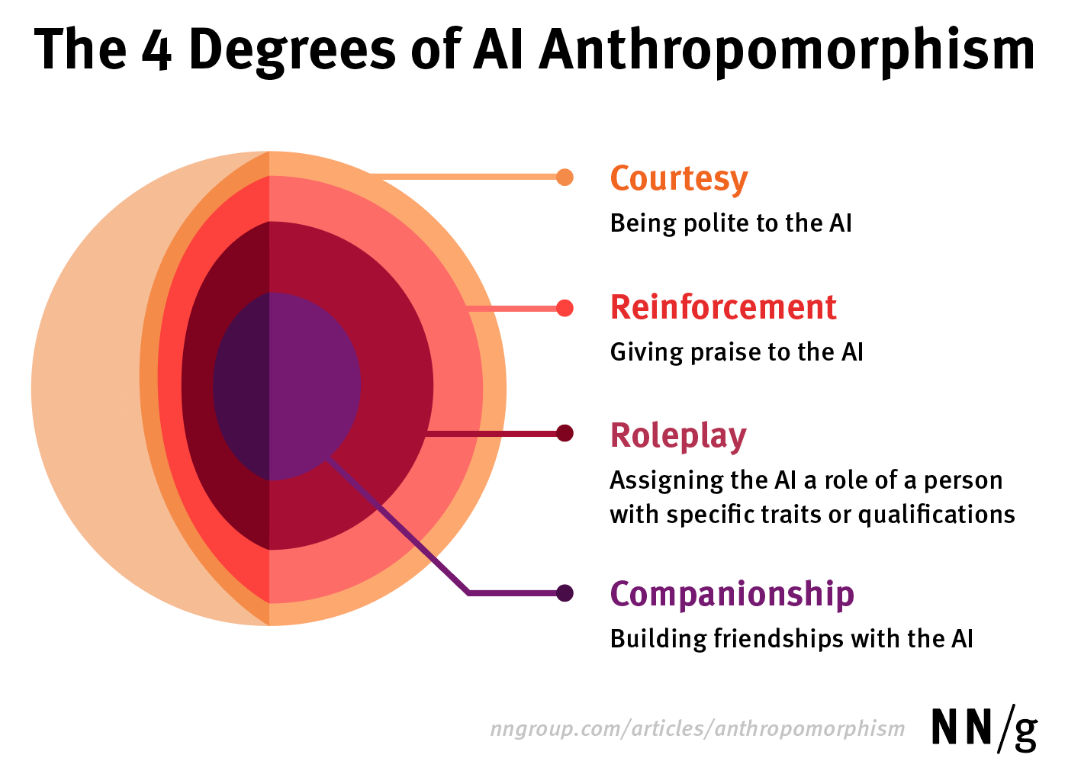

The 4 Degrees of Anthropomorphism of Generative AI: The Nielsen Norman Group released a new framework to help us understand how people attribute human-like qualities (“anthropomorphism”) to generative AI tools. If you’ve ever said “please” or “thank you” to your AI, you’ve engaged in the first degree of AI anthropomorphism, “courtesy”. This reminds me of last week’s experimentation with Character AI, in which different characters (think Billie Eilish or Mario) set the tone for how you engage with the AI. Here, anthropomorphism is built in by virtue of interacting with a character, which I’d predict is associated with more of the fourth degree, “companionship”. This may be another reason why Character AI drives greater user engagement than other AI tools, like ChatGPT.

ChatGPT-4 isn’t ready to take on UX evaluation: Now that you can upload images to ChatGPT-4, you could upload a screenshot of a webpage and ask what user experience (UX) improvements you could make. However, we wouldn’t recommend it. No, that’s not design research bias speaking: in a test that used the AI model to conduct UX audits of 12 different webpages, the Baymard Institute compared its UX suggestions to those of a qualified UX professional. ChatGPT-4 had an 80% false-positive error rate and a 20% accuracy rate. In short, work with a design researcher to evaluate your UX, unless you want an 80% error rate.

Xreal’s Air 2 glasses put a big screen on your face and ship in the US next month: The Verge describes Xreal’s launch of two new sets of lightweight AR glasses (Xreal Air 2, Xreal Air 2 Pro). The glasses were designed with a strong focus on improving user comfort. Key use cases include large-screen app experiences (e.g., streaming services, gaming). Users can connect the glasses to their smartphones, game consoles, and other devices, then open an app and view it on a virtual AR screen, like a large external monitor in a glasses form factor. The focus on comfort and media consumption suggests you can use them on the go around other people (e.g., on public transit). I’ll be curious to see whether the new designs are deemed socially acceptable by users and those around them, as this will be important to adoption. I’m also curious about emerging expectations around how privacy is signaled on smart glasses. That is, though the Xreal Air 2s don’t include cameras that can track or record your surroundings, will they look noticeably different from non-AR glasses in practice? (From my experience with the last generation, they do look different.) Will users feel self-conscious because others might think they are recording, and how will this affect adoption?

Brilliant Labs raises $3M for generative AI-based AR glasses: Singapore-based Brilliant Labs raised funding and introduced a rebranded generative AI app, Noa. The app, previously known as arGPT, used ChatGPT for real-time translation into English and to help users discern truth from fiction during conversations. The company also announced the integration of Stability AI’s open-source image-generation model into its flagship AR device, Monocle, which will allow users to create AI-generated imagery from real-time snapshots of their world. Brilliant Labs and funder Wayfarer Foundation are also developing the world’s first ethics framework for head-mounted AI devices. I’m hoping affordances that clearly signal recording to bystanders are high on the list. The ability to have a fact-checker (that sometimes hallucinates) on your head also raises questions: when and how do you signal to others that you’re using this feature? In addition, we’ll see interesting HCI questions emerge around how to help users evaluate genAI output in a glasses form factor, given small screen real estate, the need to maintain situational awareness, and input methods that differ from those of a phone or laptop.

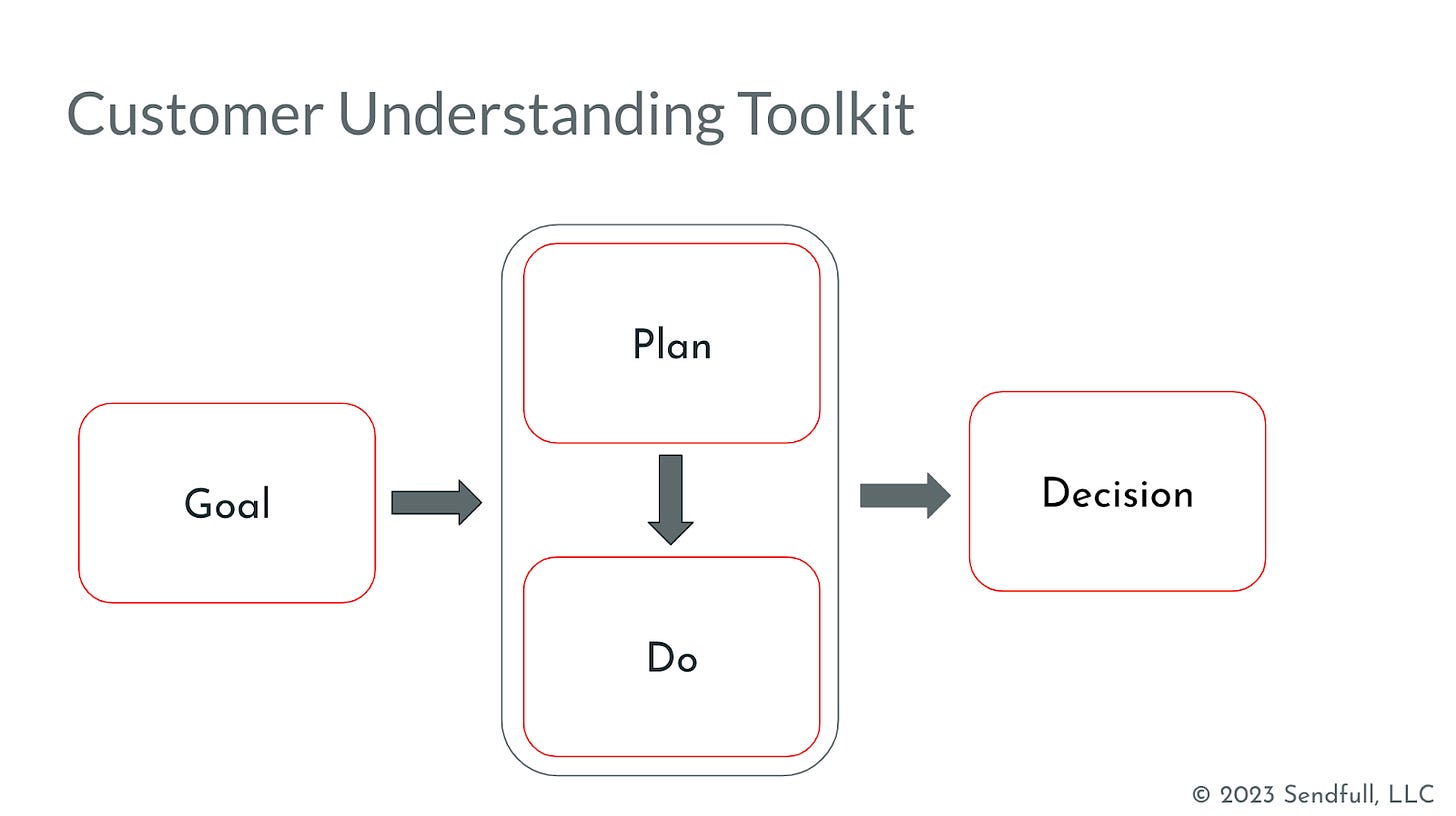

Customer Understanding Toolkit

The Customer Understanding Toolkit is a flexible set of frameworks and approaches for teams to deepen customer understanding. The Toolkit is highly versatile: you can apply it to explore markets you’re considering entering, test if early product concepts are valuable, evaluate features, and drive customer-centered pricing and sales conversations.

Read more here to learn how to scope your customer learning goals, plan and lead customer conversations, and turn findings into insights that inform decision-making.

Would you like to workshop your team’s specific goals using the Customer Understanding Toolkit? We’d love to collaborate! Reach out at hello@sendfull.com

That’s a wrap 🌯. More human-computer interaction news from Sendfull next week.