ep. 76. A Parking Meter's Quiet AI Design Wisdom


Have you ever tried to feed a ticket into a parking machine, only to have it spat back out at you, even though you were sure you had inserted it right-side up?

This was my experience last Saturday, after my partner and I had spent the day in my hometown of Toronto. We had parked in a covered lot on the Queens Quay waterfront, one of the city’s many municipal “Green P” lots.

After inspecting the ticket and feeding it into the machine in every possible orientation, I turned my attention to the prominent “help” button to my right.

I dreaded pressing it.

I imagined a never-ending ringtone, or waiting for someone to come down to the machine and troubleshoot. Whatever awaited me on the other end of that button probably wasn’t going to be a good user experience.

To my surprise, a human representative picked up almost immediately. I read him my ticket number, and an accurate total popped up on the screen. Once we were done, I completed the payment on my own. Moments later, we were on our way.

This mundane task of paying for parking struck me, not only because a bland and potentially stressful interaction became delightful, but because it was human-in-the-loop design done well.

Compare this experience with the customer service horror stories of companies that used generative AI to over-automate at the expense of delivering user value.

Take Klarna, the Swedish fintech company.

In February 2024, CEO Sebastian Siemiatkowski revealed that the company’s OpenAI-powered customer service assistant was managing 2.3 million conversations each month, covering two-thirds of all service interactions - and replacing the work of 700 full-time agents.

“I want Klarna to be your favorite guinea pig.” - Sebastian Siemiatkowski, Klarna CEO, to Sam Altman, OpenAI CEO.

The metrics looked convincing: average resolution times dropped from 11 minutes to under two, and the company forecasted $40 million in annual profit gains.

For customers, however, the reality was different: the user experience was suffering.

For example, customers sat through a frustrating 15 to 20 seconds of awkward silence between messages. When they raised concerns about financial hardship, such as asking, “What if I can’t make a payment on time?”, the AI replied with flat, generic language that offered no recognition of the stress or difficulty behind the question.

By late 2024, Siemiatkowski publicly acknowledged that the company had overextended its use of AI in customer service. By May 2025, Klarna was bringing back human customer service representatives.

Klarna had succumbed to the full automation fallacy: the mistaken belief that all human tasks can - or should - be entirely replaced by automated systems.

Still in the Loop

What if Klarna had sequenced its automation from the start? (My forthcoming book will cover how to do this!) They could have begun by automating frontline queries while handing complex or sensitive ones off to human operators. As the technology matured, the AI could then take on a wider range of requests.

In other words, Klarna would have benefitted from a handoff similar to the Green P machine: if the automated system couldn’t resolve your issue, you’d press the software equivalent of a “help” button, or be seamlessly routed to a human whenever the AI hit its limits.
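To make that handoff concrete, here is a minimal sketch of how such routing might work in a chat assistant. Everything in it is a hypothetical illustration, not Klarna's or Green P's actual system: the `BotReply` type, the `route` function, the confidence score, the 0.7 threshold, and the list of sensitive topics are all assumptions. The idea is that a turn goes to a human whenever the user presses "help", the topic is sensitive, or the AI is not confident enough.

```python
from dataclasses import dataclass

# Hypothetical topics the assistant should never handle alone (an assumption,
# not any company's real policy).
SENSITIVE_TOPICS = {"financial_hardship", "fraud", "complaint"}


@dataclass
class BotReply:
    text: str
    confidence: float  # hypothetical 0.0-1.0 score from the automated assistant
    topic: str         # hypothetical topic label assigned to the customer's query


def route(reply: BotReply, user_pressed_help: bool, threshold: float = 0.7) -> str:
    """Decide whether this turn is handled by the bot or handed to a human."""
    if user_pressed_help:
        return "human"  # the software equivalent of the Green P "help" button
    if reply.topic in SENSITIVE_TOPICS:
        return "human"  # complex or sensitive queries go to a person
    if reply.confidence < threshold:
        return "human"  # the AI has hit its limits
    return "bot"


if __name__ == "__main__":
    routine = BotReply("Your next payment is due on the 15th.", 0.95, "payment_date")
    hardship = BotReply("Late payments may incur fees.", 0.95, "financial_hardship")
    print(route(routine, user_pressed_help=False))   # bot
    print(route(hardship, user_pressed_help=False))  # human
    print(route(routine, user_pressed_help=True))    # human
```

In this sketch, sequencing automation would mean shrinking `SENSITIVE_TOPICS` or lowering `threshold` only as the assistant proves it can handle those cases well, while the "help" path always stays available.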

Once technology capabilities align with high-value opportunities, it makes sense to expand automation. We saw this in action with Air Canada’s fully automated bag check - another example of a smooth, well-designed experience.

Or the little robot lawnmower at the Halifax Citadel: while I’m no expert in lawn care, it seemed to be doing a thorough job.

You may rightly point out that the problem spaces of Green P, the Air Canada bag check, and the little lawn robot are highly bounded - there are only so many things that can go wrong with your parking ticket. Compare that to the complexity of customer service requests received by a large company.

Nonetheless, the design lesson holds: whenever we see automation done well, we should ask what it can teach us about the automation challenges in our own domain, especially as we move towards agentic workflows.

Takeaways

Turn moments of friction into moments of trust: The Green P machine turned a stressful moment into delight - and ultimately trust - through a seamless human handoff that picked up where automation left off.

Beware of the full automation fallacy: As the Klarna case study shows, defaulting to full workflow automation risks frustrating customers and undermining trust when systems can’t adapt to human needs.

Automate with humans at the center: Sequence automation with intention, asking not only “can we?” but “should we?”, aligning tech capabilities with high-value customer opportunities.

Further Reading

Working on agentic workflows? I’d love to interview you for my upcoming book - reach out at stef@sendfull.com

Human-Computer Interaction News

95% of organizations are getting zero ROI on generative AI - MIT Nanda

AI robots are helping South Korea’s seniors feel less alone - Rest of World

Extended Reality coming of age at the Venice film festival - The Guardian

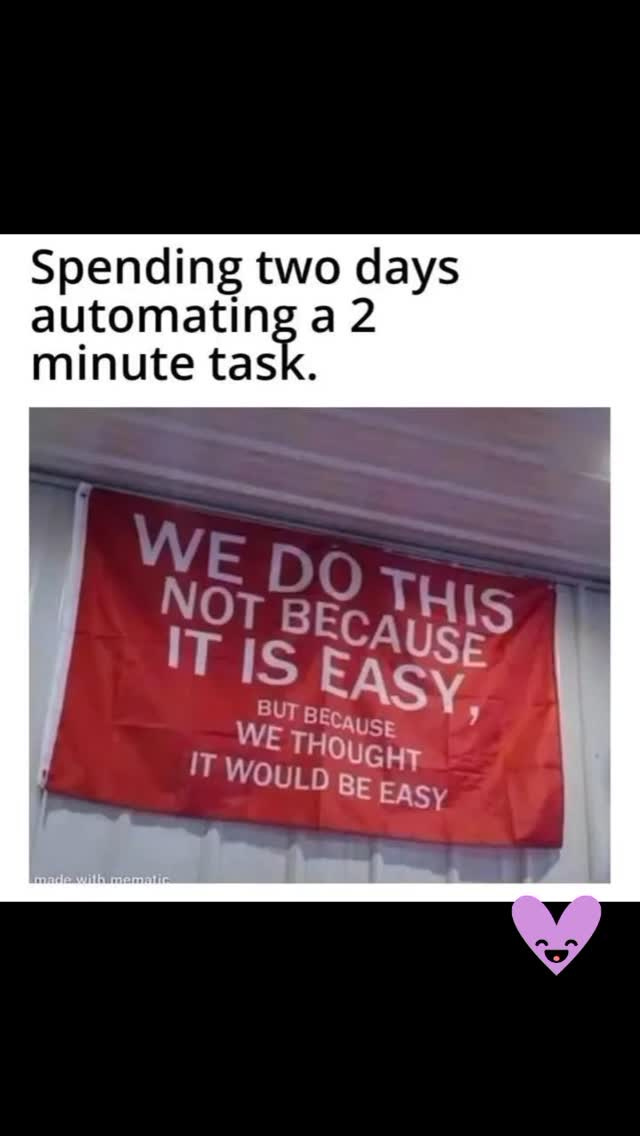

Bonus: Automation on socials 👇🏼

That’s a wrap 🌯. More human-computer interaction news from Sendfull in two weeks!