I keep coming back to Andrej Karpathy’s talk, Software Is Changing (Again).

It’s one of the most clear-eyed takes on how to approach designing LLM apps. He describes LLMs as “people spirits”, stochastic simulations of humans with a jagged skillset - amazing encyclopedic knowledge, but subject to anterograde amnesia, gullibility, and hallucinations.

Building fully autonomous LLM apps means we’re at the mercy of this jagged skillset, cognitive deficits included.

Instead, he urges us to build partially autonomous apps - augmentations that enhance our capabilities while keeping us in control. Think Iron Man suits, not Iron Man robots.

“Less Iron Man robots, more Iron Man suits.” – Andrej Karpathy, Founding Member, OpenAI

Less than two months after Karpathy’s talk, GPT-5 arrived with fewer hallucinations and better memory (read: less anterograde amnesia) than its predecessors. The jagged skillset is already starting to smooth out.

Given the scale of investment in AGI (10× the Manhattan Project), we’ll likely see continued improvements in LLMs’ cognitive deficits. But faster progress doesn’t change Karpathy’s core principle. Just because we can build Iron Man robots doesn’t mean we should.

Automating with Humans at the Center

How do we automate with humans at the center, especially as technical capabilities continue to evolve?

This is the question we explored in the session I led at UC Berkeley on Monday for the School of Information’s Master of Information and Data Science (MIDS) program, part of the annual MIDS Immersion conference.

The room was packed with students who are also working full-time in industry, many already experimenting with building and adopting agentic workflows in their organizations. This made for a rich conversation about the live challenges and opportunities of AI automation.

Takeaways

1. Generative AI is rewriting the product development playbook.

Generative AI is “the exact opposite of a solution in search of a problem. It’s the solution to far more problems than its developers even knew existed.” - Paul Graham, co-founder, Y Combinator

Generative AI is a solution unlocking new problem spaces.

Unlike other disruptive innovations that share this core dynamic (e.g., smartphones unlocked a market for rideshare apps), generative AI is unique for three reasons:

Unprecedented growth (see: 100 million ChatGPT users in <2 months)

Breadth of its impacts (name one business that isn’t affected)

Stochastic nature (read: unpredictable output)

The result? Product-market fit is becoming model-user fit.

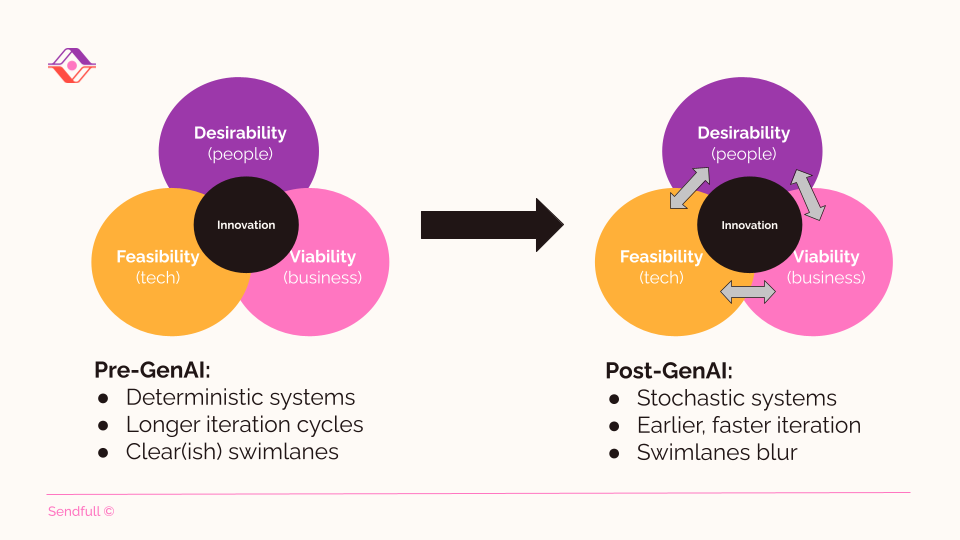

Model decisions, such as the training data, directly shape the UX. Unlike deterministic systems, stochastic generative AI systems allow earlier, faster iteration. Gone are the days of requirements > build > test > iterate over weeks; now teams work live with models, prompting, observing, and tweaking in real time. This accelerated pace quickly raises expectations for how fast work should move.

When some functions (e.g., engineering) adopt generative AI tools while others (e.g., design) don’t at the same rate, the faster team sets the pace. The slower team becomes the bottleneck, not because their work is less valuable, but because their process can’t match the new speed.

It’s a hot mess, made hotter by top-down market pressures to AI-ify in the name of cost-cutting and staying competitive.

I used to describe developing extended reality products, like augmented reality headsets, as “building the plane while we’re flying it.” With generative AI, it’s more like building a hypersonic jet while everyone’s holding a different screwdriver, and one of them might suddenly turn into a spoon.

2. Organizational transformation is hard. Generative AI makes it harder.

The shift to the generative AI product development cycle is an example of organizational transformation - something that was hard even before we all decided we were building in-flight hypersonic jets.

McKinsey has tracked organizational transformations for years. In December 2021 BGI (Before Generative AI), they reported that 70% of large-scale transformations fail - a number that’s held steady for 15 years.

“The 30 percent success rate hasn’t budged after many years of research, and we now know that even successful transformations still leave value on the table.” - McKinsey & Co.

Now layer on agentic workflows.

Leadership often operates from the full automation fallacy, the belief that all human tasks can or should be entirely replaced by automated systems. Meanwhile, teams on the ground often see why that’s not always the right move.

In my session’s icebreaker, participants quickly identified what they would automate instantly (e.g., data fusion across wearables for nuanced rest/workout predictions) and what they’d never automate (e.g., tasks that bring “joy, fulfillment, and accomplishment”).

This alignment gap is further compounded by uncertainty about technical capabilities, with no shared language for communicating opportunities and risks. The result?

4% of companies generate substantial value from generative AI (BCG, 2024)

1 in 4 CEOs report AI initiatives have met ROI expectations (IBM, 2025)

Headlines like “Klarna reverses on AI, Says Customers Like Talking to People” (Forbes, 2025) and “260 McNuggets? McDonald’s Ends AI Drive-Through Tests Amid Errors” (NYT, 2024)

3. A shared framework helps us prioritize, align, and sequence.

In the workshop, we used a framework from my forthcoming book to rethink customer service automation at Klarna, balancing “should we?” (value) with “could we?” (feasibility). I’ll save the full framework for the book, but here are three insights we discussed:

Map the ecosystem, not just the end user.

When we say “let’s automate customer service”, we’re touching all of the props, people, and processes that make it work. Do we know what all of those are? If we did somehow fully automate them, what value would that deliver, and to whom? What else might shift, like customer expectations and handoffs?

Service design offers great tools here. For instance, service blueprints to visualize how props, people, and processes work together, and a stakeholder map to surface hidden risks and opportunities.

Taking a holistic view of value is essential because value is a key input when deciding what to automate.

Facilitating cross-functional knowledge sharing is an underrated superpower.

Influence isn’t only about direct decisions. It’s also about connecting the dots across teams. Facilitate conversations that:

Surface institutional knowledge (and assumptions!) about customers

Build shared intuition about technology capabilities

Use a shared framework to help people align

If you’re a workshop wizard who knows customers inside out but not the tech, partner with someone who does. If you know the tech but haven’t facilitated, team up with someone who has (chances are, they know your customers, too). These partnerships help build stronger bridges, faster, and we need every bridge we can get.

Embrace levels of partial autonomy.

To automate in a way that delivers value, we need to decide whether a task calls for humans to be in the loop, actively making key decisions, or on the loop, monitoring and stepping in only when needed.

The choice depends on variables like use case, risk tolerance, and customer expectations. In high-stakes or ambiguous scenarios, being in the loop ensures human judgment guides the outcome; in lower-risk, repeatable tasks, being on the loop frees people for higher-level work.

Designing for these modes, and knowing when to transition between them, is what building Iron Man suits is all about.
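The in-the-loop versus on-the-loop choice above can be sketched as a simple routing rule. This is only an illustration, not the framework from the book: the `Task` fields `high_stakes` and `ambiguous`, the `OversightMode` names, and the example tasks are all hypothetical stand-ins for the variables mentioned above (use case, risk tolerance, customer expectations).

```python
from dataclasses import dataclass
from enum import Enum


class OversightMode(Enum):
    IN_THE_LOOP = "human actively makes the key decision"
    ON_THE_LOOP = "human monitors and steps in only when needed"


@dataclass
class Task:
    name: str
    high_stakes: bool  # hypothetical flag: would an error be costly or hard to undo?
    ambiguous: bool    # hypothetical flag: does the task call for judgment?


def choose_oversight(task: Task) -> OversightMode:
    """Keep a human in the loop for high-stakes or ambiguous tasks;
    put the human on the loop for lower-risk, repeatable ones."""
    if task.high_stakes or task.ambiguous:
        return OversightMode.IN_THE_LOOP
    return OversightMode.ON_THE_LOOP


# Hypothetical customer service tasks, for illustration only.
tasks = [
    Task("refund dispute", high_stakes=True, ambiguous=True),
    Task("order status lookup", high_stakes=False, ambiguous=False),
]
for task in tasks:
    print(f"{task.name}: {choose_oversight(task).name}")
```

In practice the inputs would rarely be two booleans, and a task might move between modes over time as confidence in the system grows. The point of the sketch is that the oversight mode is an explicit, per-task design decision rather than a global setting.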

tl;dr

As generative AI’s capabilities sharpen, the challenge isn’t just what we could automate - it’s what we should. And we should automate only when doing so augments our capabilities while keeping us in control.

Further Reading

Working on agentic workflows? I’d love to interview you for my upcoming book - reach out at stef@sendfull.com

Human-Computer Interaction News

The World’s First Icons to Show Human–AI Collaboration: Developed by the Dubai Future Foundation, the Human–Machine Collaboration Icons offer a new global standard for transparency, indicating the extent to which humans and AI worked together throughout the creation process. Mandatory for the Dubai government, voluntary for everyone else.

An AI Social Coach for People with Autism: “Noora” is a chatbot that helps people with autism practice social skills.

A Taxonomy of LLM Hallucinations: The taxonomy is neat, but what stood out was the author’s point about the “inherent inevitability [of hallucinations] in computable LLMs, irrespective of architecture or training”. Maybe the skillset will be jagged for a while. Another +1 for Iron Man suits.

That’s a wrap 🌯. More human-computer interaction news from Sendfull in two weeks!