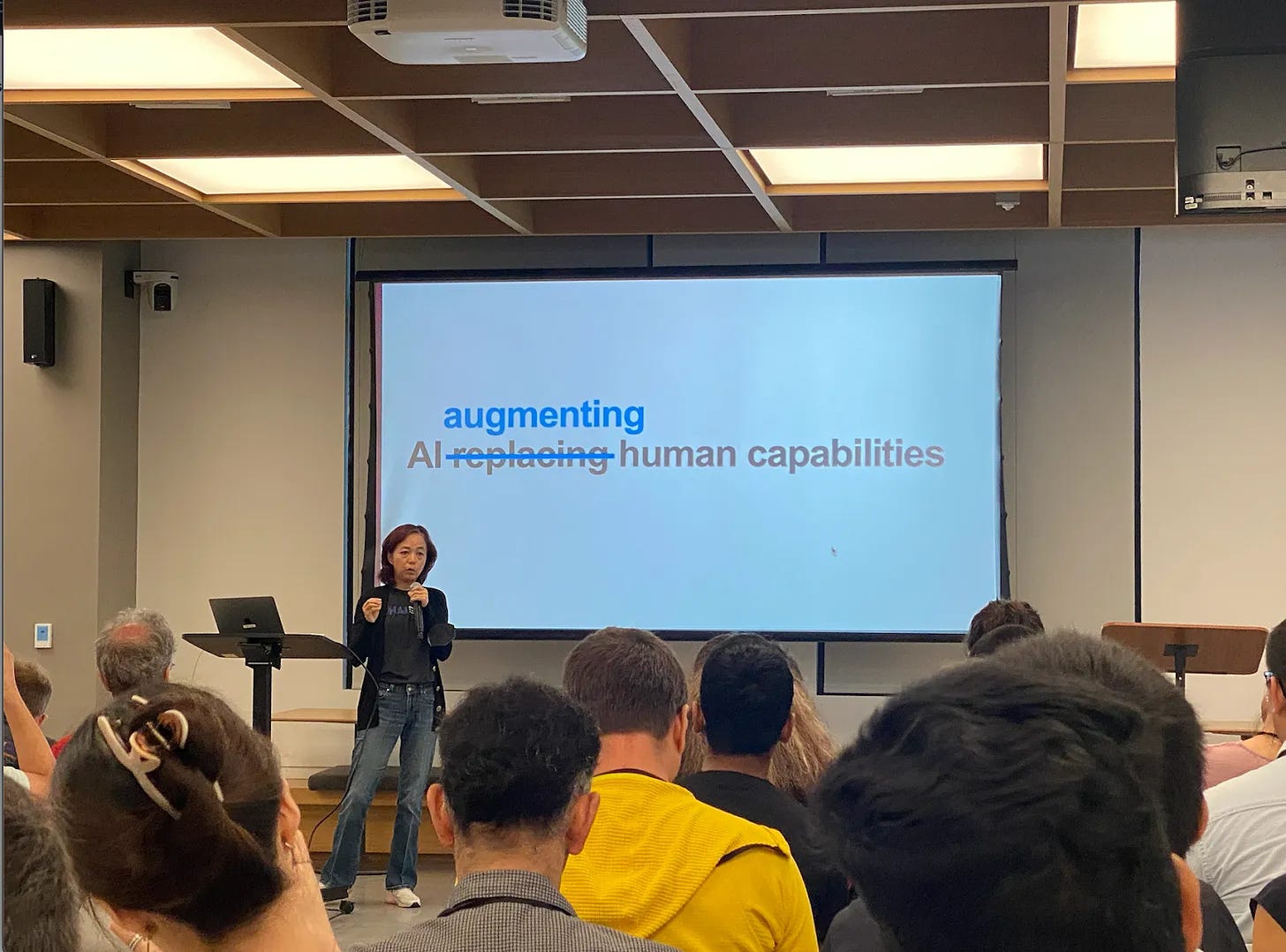

“AI must augment, not replace, human capabilities”.

If you work in tech, you’ve likely heard this phrase before. I first remember hearing it from Fei-Fei Li, often called the “godmother of AI,” at the BayLearn ML Symposium back in October 2023. It’s become something of a rallying cry for those building human-centered AI - but what does it actually mean in practice? Why should product teams care? And what can computing history teach us about the present and future of augmenting human capabilities? Today’s episode unpacks these questions and shares takeaways for product teams deciding which AI tools to build.

What Augmentation Looks Like in Practice

In her BayLearn keynote, Professor Li walked through the evolutionary history of vision - from its origins in simple organisms 540 million years ago to its foundational role in human intelligence. Vision, she argued, didn’t just help us perceive the world - it enabled us to act on it, forming the basis of more complex cognition.

She used this framing to explain how her lab applies neuroscience and cognitive science to build visual AI systems. Their work explores how machines can replicate human visual processes, and where human perception falls short. One example she discussed is change blindness, where people fail to notice significant changes in a visual scene. You’ve probably seen a related demonstration of inattentional blindness: viewers asked to count passes in a basketball game often fail to notice a person in a gorilla suit walking right through it.

This gap in human visual capabilities presents a meaningful opportunity for AI to step in - not to replace us, but to extend our capabilities. Healthcare is one domain where this clearly matters. Medical errors have been reported as the third leading cause of death in America. Making matters worse, we’re facing a labor shortage: by one estimate, America would need 1.2 million new registered nurses by 2030 to address the current shortage. More eyes are needed to keep patients safe, especially eyes that can catch what humans might miss.

Imagine designing a system that uses electronic sensors and AI to help medical professionals monitor and treat patients. The system would be designed not only to improve patient outcomes, but also to protect patients’ privacy (e.g., via facial anonymization). That’s augmentation: addressing a gap in human capabilities, with clear human-AI complementarity.

Why It Matters for Product Teams

For product teams creating AI systems, “building AI to augment human capabilities” isn’t just a philosophical stance; it’s practical. When AI augments rather than replaces human capability, we create solutions that are easier to adopt, more trustworthy, and more aligned with real needs. What does this look like in your metrics? Retention, engagement, customer satisfaction, and long-term ROI.

Building tools that miss the mark is also expensive. One widely cited industry estimate found that 80% of product features are rarely or never used, representing $29.5 billion in investment that could have gone toward more impactful features. AI compounds this risk: if you ship a system that users can’t understand, don’t trust, or don’t need, the cost isn’t just lost time - it’s reputational damage and reduced ROI.

Learning from Computing History to Look Ahead

Today’s episode didn’t stem from the latest paper on AI and cognitive offloading. It came from looking back at computing history while I was preparing a talk for XRLab Berkeley on multisensory design for XR systems.

In that talk, I revisit Vannevar Bush’s 1945 essay “As We May Think” in The Atlantic. Bush presented the concept of the memex, a system to extend human memory. This vision would go on to influence the development of the World Wide Web and personal computers.

The memex also inspired Douglas Engelbart’s work at Stanford Research Institute (SRI), which focused on “augmenting human intellect”. His team’s work would yield innovations like the computer mouse and graphical user interfaces (GUIs), culminating in the “Mother of All Demos” in 1968.

These early systems weren’t designed to automate away human thought; they were built to enhance it. The name memex itself is often glossed as “memory extension,” and the center Engelbart founded at SRI was called the Augmentation Research Center. People building technology have aimed to augment, rather than replace, human capabilities for a long time. AI is a natural extension of that tradition, albeit one unfolding faster than anything we’ve seen before in the history of technology.

We’re also at an inflection point in the move toward more embodied interfaces, driven by AI-enabled robotics and the rise of spatial computing. Augmentation is moving beyond flat GUIs toward more intuitive interactions with the physical world. We’re already seeing evidence of this shift in smart glasses with generative AI capabilities, such as Ray-Ban Meta 2 and Frame by Brilliant Labs. We will need to start asking ourselves if, when, and how we can augment human capabilities with AI - not just in digital spaces, but in the physical world around us.

Takeaways

Define the augmentation opportunity. Ask: What are people already doing well? Where do they struggle? Design AI tools to help fill those gaps. Use the Cognitive Offloading Matrix for guidance.

Prioritize interpretability and control. Augmenting human capabilities means helping people act with more confidence - not taking decisions away from them.

Design for complementarity, not substitution. Build systems that extend human strengths and compensate for human limitations. AI should be a helpful partner, not a tool that makes us obsolete.

Look beyond automation metrics. Value doesn’t always mean time saved. Sometimes it’s about error prevention, better decisions, or improved experience.

Learn from the history of computing and human-centered design. Many challenges we face today with AI, such as the potential for over-reliance on automation, have been explored in other contexts in HCI and human factors. Learning from this history can help inform how we build for the future.

Sendfull in the Wild

May 7: EPIC People Tutorial

I’m excited to be teaching a tutorial with EPIC People on Designing AI to Think with Us, Not for Us: A Guide to Cognitive Offloading, happening May 7. Join me for this half-day course to learn the strategic tools and cognitive science expertise you need to drive insights, design, and decision-making that empower people and build trust.

Everyone is welcome to register, and EPIC members get $50 off: https://www.epicpeople.org/project/5725-designing-ai-cognitive-offloading/

May 21: UpScale Conf Panel

I’ll be joining AI x Design leaders Brooke Hopper and David Montero for a discussion on how you design the future when the future doesn’t exist yet. Get your tickets here: www.upscaleconf.com/#section-get-tickets

Human-Computer Interaction News

Anthropic Education Report: STEM students, particularly in Computer Science, are early and heavy adopters of AI tools. Overall, students primarily used AI for higher-order thinking skills like creating (using information to create something new) and analyzing (taking apart the known and identifying relationships), such as creating coding projects or analyzing law concepts. This usage raises questions about how to ensure students don’t offload critical cognitive tasks to AI systems.

Adapting Marketing Strategies for AI-Driven Discovery: Bain & Company reports that generative AI is changing how consumers find and evaluate products, often bypassing traditional marketing touchpoints through zero-click journeys.

Stanford’s AI Index 2025: The Stanford Institute for Human-Centered Artificial Intelligence summarized the state of AI in 10 charts. Insights we might not hear as much about: health AI flooding the FDA, Asia showing more AI optimism, and smaller models getting better.

Designing emerging technology products? Sendfull can help you find product-market fit. Reach out at hello@sendfull.com

That’s a wrap 🌯. More human-computer interaction news from Sendfull in two weeks!