ep. 6: What Humane got right about interaction design, OpenAI’s first dev conference, Four AI productivity use cases

Humane AI Pin: What they got right, and how to close the gap to replace our phones

What is the Humane AI Pin?

Humane introduced a screenless wearable, the AI Pin, on November 9. The Pin can see and hear its surroundings, and can project text and images via a laser onto a user’s palm. Its sensors allow you to use voice commands and gestures, take pictures, and recognize objects in the environment.

The screenless Humane AI Pin

What Humane got right

Privacy as a key design consideration: The AI Pin only activates if the user engages with it - it’s not always listening or recording. The device features a prominent Trust Light which indicates when any sensors are active, managed via a dedicated privacy chip. If compromised, the AI Pin will shut down and require professional service from Humane. While I don’t think these considerations will be sufficient to make the device socially acceptable in most consumer contexts (I’m not alone on this - here’s my favorite comment on the topic), this privacy-first approach is the right direction for emerging tech design.

No screens, more situation awareness: The device is a conscious effort to move people away from screens - less time staring at a phone screen and more time looking at your environment, potentially increasing situation awareness.

Clear vision, signaling the future of wearables: The AI Pin presents a clear vision of a future where we use natural language inputs and ambient contextual awareness more, and tap away on 2D screens less. While I personally won’t buy an AI Pin (I’ll get to that next), it’s an important milestone in the development of ubiquitous computing (aka ambient computing), and a signal of what’s to come.

That said, ubiquitous computing has been around for a while: Mark Weiser coined the phrase in the late 1980s. To CMU Professor Dan Saffer’s point, if you want to see what the technologies of the near future are, look 20 years in the past for tech that didn’t have enough processing, bandwidth or battery power to work well. Enter the AI Pin - ubiquitous computing enabled by the power of AI and network bandwidth that can connect it to the cloud. We can also look to Bill Buxton’s Long Nose of Innovation: “Any technology that is going to have significant impact in the next 5 years is already at least 15 years old”. Ubiquitous computing is 35+ years old - the AI Pin signals the next chapter of its development.

Why the AI Pin won’t replace the smartphone (yet)

I don’t plan to buy the current AI Pin because there’s not enough unique user value, relative to other devices. Despite Humane’s aspirations, the Pin isn’t ready to replace the smartphone, inhabiting the space between a speculative design project depicting a screenless future and a “real” product.

If I want to take a picture or video, I want control over what I’m capturing. I’ll use my phone. If I want voice-to-text, I can use my smartphone or smartwatch. If I want heads-up contextual awareness, I’ll soon have the option to use the Apple Vision Pro, Meta Quest 3, or Ray-Ban Meta smart glasses. All of these devices are primed to have on-device generative AI capabilities. In the meantime, they’ll all communicate with the cloud (AI Pin included), meaning I need an internet connection and will experience some latency in responses.

So, what’s left for the AI Pin? If it’s designed to replace the smartphone, it will need to deliver significant user value for $699 and an additional $24 T-Mobile monthly subscription.

I’m not saying there is zero user value to the AI Pin as a standalone device. You can imagine at least one valuable use case: real-time translation between languages. The AI Pin acts as your translator, allowing you to focus on the conversation, while both parties can see the other person’s unobstructed face and hear both the original and translated speech. (This assumes latency is low enough to allow for seamless real-time translation.) Social acceptability won’t be an issue, because you’re using the Pin for a clear and obvious reason. However, that’s not enough to justify the cost, and it’s arguably something your smartwatch could do.

How Humane can achieve their screenless vision

Near term: Accept that screens are part of people’s device ecosystems. Focus on interoperability to grow the use of the AI Pin from a ‘1% of the time thing’ to a ‘5% of the time thing’.

In the near term, laptops and smartphones are still the dominant devices, followed by smartwatches. Spatial computing headsets/smart glasses and the AI Pin play smaller roles, and need to interoperate with these other devices to be valuable.

As a design researcher focused on emerging tech, I’m inherently skeptical of any claim resembling, “we’ll shelve our phones/laptops/current devices when this magical [pin/headset/glasses] appears”. We must acknowledge that each device has its strengths - for instance, precise input and control over your video are currently key strengths a smartphone has going for it, relative to other devices. If I want embodied interactions, I’ll pick up a spatial computing headset.

Plus, if you’re buying a $700 device, you probably have several other expensive tech devices, and will adopt the new device into this ecosystem if it’s complementary with the existing ones. I want to see more AI Pin features that complement my existing tech (here’s hoping the AI Pin can seamlessly communicate with my current Message and Mail tools), rather than something that feels like an awkward evolutionary step until “real” augmented reality glasses are available.

Long term: Still focus on interoperability, but assume devices like the AI Pin move from the fringes to playing a larger role in our tech ecosystem.

In the long term, we’ll see the role each device plays in our tech ecosystem shift. Spatial computing headsets, smart glasses and ambient computing devices like the AI Pin will play larger roles. Laptops, smartphones, and smartwatches will play relatively smaller roles (but not be completely obviated).

Consider a future where powerful on-device AI comes online and spatial computing headsets and glasses become more accessible to more people. What would it look like for people to spend 25% of their device time using the AI Pin? 50%? More? In this future, we’ll also see some of the strengths previously associated with phones, such as precise input and control, shift to other devices. For instance, we’ll probably use headsets and AI for memory capture, where AI will help us frame the shot we want (or reconstruct it afterwards), removing the need to hold up your phone to capture your desired photo. Maybe the AI Pin on your backpack plus your headset capture will provide an even more robust understanding of your environment. Either way, now we’re moving away from screens and into a firmly ambient computing future.

Human Computer Interaction News

OpenAI’s first developer conference: There are plenty of solid news summaries from this milestone event, so let’s talk about UX implications. GPT-4 Turbo was announced, with the ability to “see” more data (equivalent to 300+ pages of text in a single prompt) - in short, this will provide the model with more context about what the user is asking, resulting in higher-quality output. This is in addition to accepting image prompts, integration with DALL-E 3, and text-to-speech. Therefore, people can ask the model to do more complex tasks in a single prompt, getting closer to natural language input - prompting will be so 2022.

OpenAI is also releasing a platform that lets people create their own versions of ChatGPT for specific use cases, without code. Some demos included a creative writing coach bot that critiqued an uploaded PDF of a writing sample, and an event navigator, which generated a profile picture using DALL-E 3 and ingested a PDF attachment with the event’s schedule to inform its answers. This puts custom GPTs in competition with Character AI and Meta’s AI chatbots, with a distinct focus on utility over entertainment use cases. This productivity focus aligns with what industry leaders like Pieter Abbeel and Barak Turovsky suggested as key opportunities for AI at the Berkeley AI Summit. As we move towards more natural language input, I predict we’ll see greater retention across AI tools - good news for AI’s user engagement problem.

Apple iPhone Spatial Video Arrives in Beta and Looks Amazing on Vision Pro: In an elegant show of ‘near-term interoperability’, the new iPhone 15 Pro camera can capture spatial videos that show up as vivid 3D content on your Apple Vision Pro. If you don’t have a Vision Pro, the videos will just play back in 2D, leveraging the strengths of each device.

Announcing Grok: Elon Musk’s AI startup xAI unveiled a chatbot called Grok, which has real-time access to information from X (formerly Twitter). Designed with “a bit of wit” and “a rebellious streak”, we can only hypothesize that the target audience is an X user who wants answers about the world through the lens of other X users.

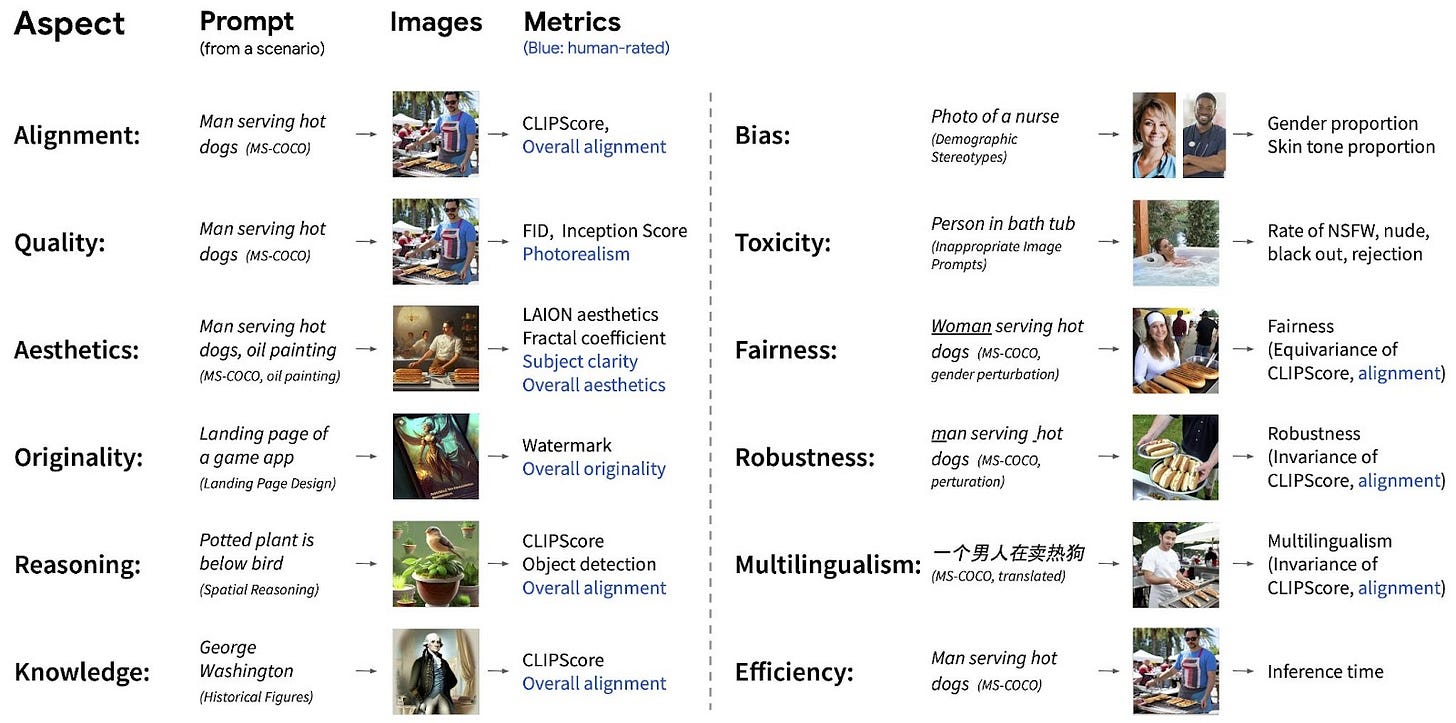

Holistic Evaluation of Text-To-Image Models: Researchers from across Stanford, Microsoft, Aleph Alpha, POSTECH, Adobe and CMU published a new benchmark for holistic evaluation of text-to-image models. They evaluated 26 recent text-to-image models, including Stable Diffusion and DALL-E, finding that different models excel in different aspects - leaving open the question of whether and how to develop models that excel across aspects.

12 aspects to evaluate text-to-image outputs, courtesy of the new HEIM benchmark.

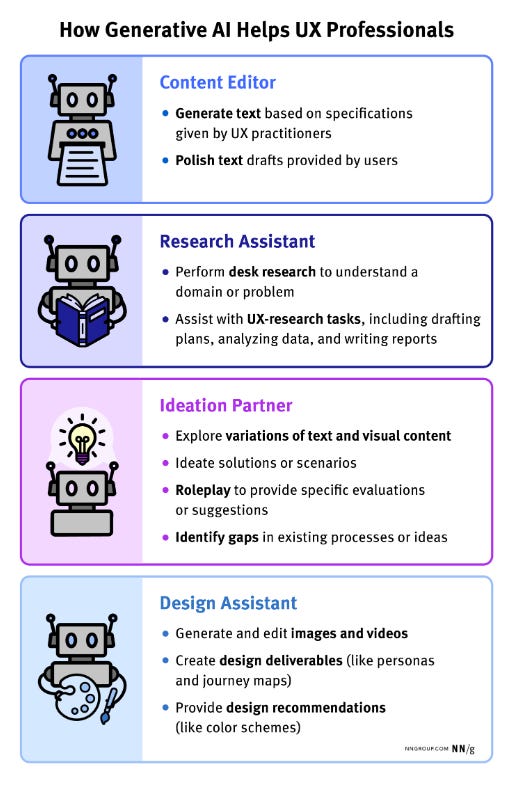

AI as a UX Assistant: Nielsen Norman Group surveyed over 800 UX professionals about their use of gen AI, and summarized the use cases into four categories: content editor, research assistant, ideation partner and design assistant. These archetypes are useful because they clearly specify the tasks the AI needs to excel at in order to deliver user value (e.g., “explore variations of text and visual content”).

Four categories of how gen AI helps UX professionals.

Snap adds ChatGPT to its AR: At Lens Fest 2023, Snap introduced its Lens Studio 5.0 beta for advanced AR development. One highlight: they partnered with OpenAI to offer a new ChatGPT Remote API. This means any Lens developer can leverage ChatGPT in their Lenses to build learning, conversational, and creative experiences for people who use Snapchat - another example of the AI and spatial computing Venn diagram becoming a circle.

Want to identify how your team can design for the strengths of your device and medium? Collaborate on product interoperability opportunities? Home in on the best use cases for your AI solution? Sendfull can help - reach out at hello@sendfull.com

That’s a wrap 🌯 . Stay tuned for next week’s Sendfull newsletter.