We’ve all heard some version of “we shouldn’t build technology for technology’s sake,” especially in the context of emerging technologies like generative AI. What does this actually mean, and why do we often build this way despite knowing better?

This week, I’ll address this question and propose a framework in which problem space and solution space interact symbiotically from the early stages of product development. While this challenges the traditional design practice of starting exclusively in problem space, it’s necessary: we need an approach that accounts for the role solution space realistically plays as we build new products. This is especially true in gen AI, where solution space and problem space are advancing in parallel at unprecedented rates.

Problem versus solution space

This topic has been top of mind as I teach my students about problem versus solution space. A quick refresher: Problem space is the conceptual landscape where we explore, understand and frame a particular problem we want to solve for people. This is the ideal starting place for a human-centered design process. A great example of this done well is the story of OXO’s Good Grips handles.

Sam Farber observed his wife Betsey having trouble holding her vegetable peeler due to arthritis. Sam saw a people problem: ordinary kitchen tools that hurt people’s hands. How might we build tools that are comfortable to hold? Out of this problem space emerged the OXO Good Grips handles, which served not only people with arthritis but also people with a range of hand sizes and grip strengths. OXO Good Grips remain a strong example of universal design.

In contrast to problem space, solution space is the range of possible approaches and design options available to address a particular people problem. What happens when we skip problem space and start from solution space?

The Juicero personal cold-press juicer is a prime example.

Launched in 2016 with a $699 price tag, the Juicero pressed proprietary packs of fruits and veggies into juice, all while internet-connected and controlled by a mobile app. The company shut its doors after 16 months, but not before raising $120 million from investors, including high-profile firms like Google Ventures, and earning a positive profile in the New York Times. A key domino in Juicero’s downfall was a Bloomberg News report showing that people could easily squeeze the packs by hand, faster than the machine could press them. The Guardian shared a memorable quote: “Juicero has since become something of a symbol of the absurd Silicon Valley startup industry that raises huge sums of money for solutions to non-problems.”

The story of Juicero is not only a cautionary tale about starting in solution space; it also shows how common it is in Silicon Valley to jump to a solution without a clear people problem. The rise of gen AI has only magnified this tendency: solution space is growing at an unprecedented rate, and we’re often left searching for a use case after the fact.

One issue is the current incentive structure, which rewards quick wins over long-term thinking grounded in a thorough discovery process. However, I’ve experienced first-hand that a technology-driven innovation team can be genuinely invested in understanding people problems early, in parallel with solution development. As I’ve taught existing design frameworks to my students, I’ve noticed that we lack a framework that accounts for how problem and solution space can coexist and symbiotically inform each other during emerging product development.

Whether it’s the original Double Diamond, the version in The Design of Everyday Things, or the iterative cycle of human-centered design, these frameworks fail to acknowledge that in practice, a solution may already be in mind (or in development) at the outset of a project. In gen AI, this strict separation of problem and solution space is especially unhelpful. For example, the capabilities of a large language model (LLM), aka the solution, are often known early in a project. Realistically, you’re not starting from a blank slate, where you conduct needfinding, diverge and converge, and only then does the idea to build an LLM emerge from the ideation process. More likely, problem and solution space coexist and inform each other much earlier.

Why we need symbiosis between problem and solution space for gen AI

It’s becoming increasingly clear that gen AI is not just another technology: it is uniquely difficult to design for. However, the antidote to building AI solutions that lack clear use cases is not just to “spend more time in problem space,” identifying people’s problems untethered from an AI solution. Indeed, research has found that needs uncovered in user research often point to issues that AI will not help with. Furthermore, conventional user-centered brainstorming does not work well during ideation when the solution must use gen AI. Instead, we need a clear understanding of AI’s capabilities because, at present, AI is good, but not good enough for most things on its own (see: why you wouldn’t want to take a nap in a Tesla). We must accept that problem and solution space are connected from the start of the development process, and ask, “What are the problems that we can solve for people by employing gen AI?”

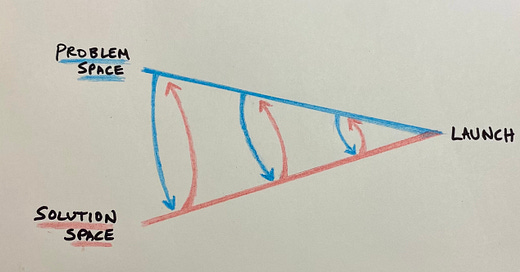

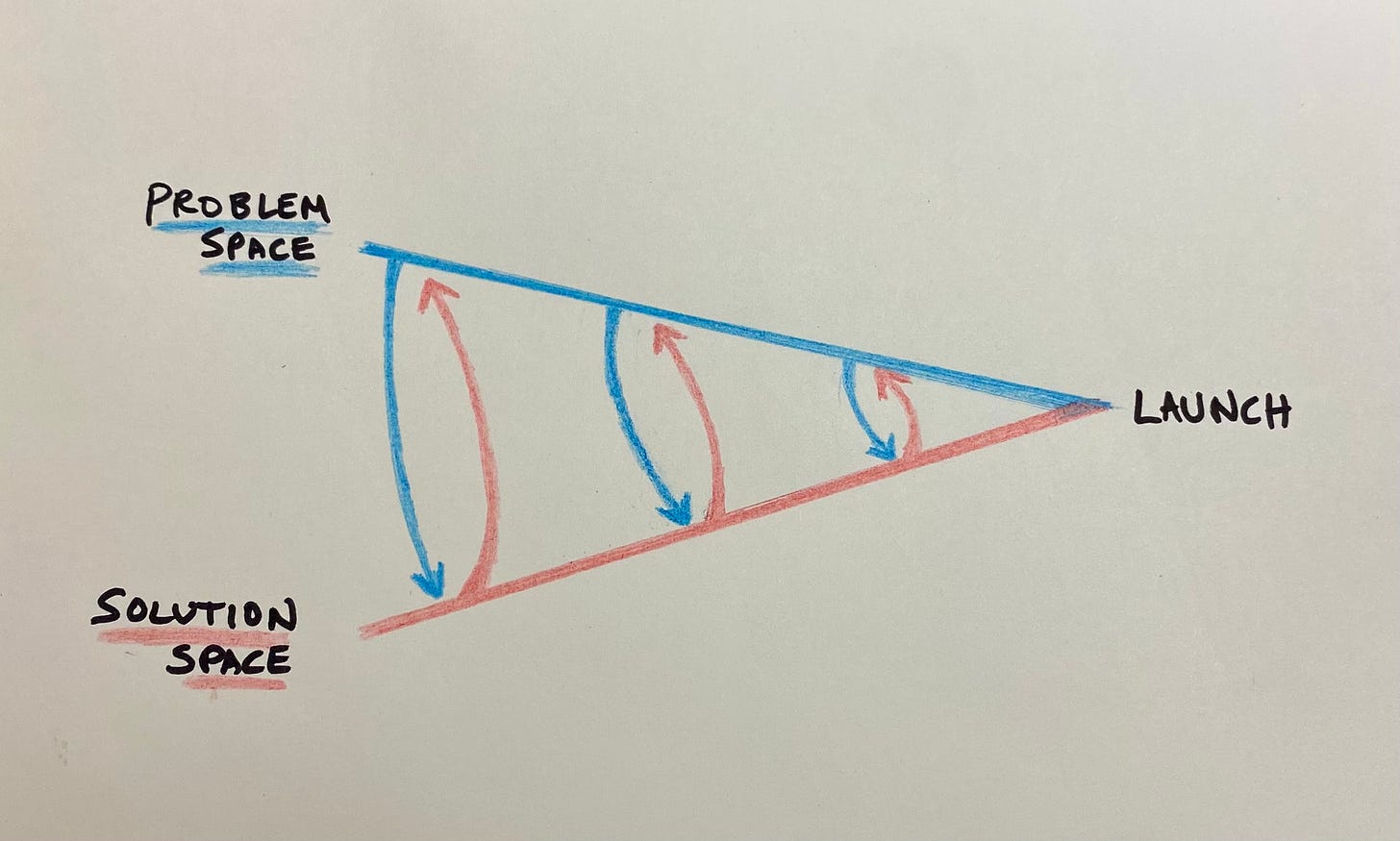

A framework built around this question begins by exploring whether a given technology solution is feasible, in parallel with understanding user needs. The team starts to identify which problems can be solved for people using the solution. Iteration occurs as more is learned about user needs; as the technology develops, more opportunities to meet those needs may emerge. As this symbiotic development progresses, you converge on a solution that effectively meets user needs. I call this the Problem-Solution Symbiosis framework.

This approach of examining problems that we can solve for people by employing AI is supported by recent human-computer interaction research from Carnegie Mellon University. The researchers set out to improve the process of envisioning AI products and services through more effective ideation. They hypothesized that this would reduce the risk of producing AI innovations that cannot be built or that do not address real human needs. They began by collecting AI examples and capabilities, then synthesized these into a shortlist of capabilities, with synonyms, definitions and examples.

Next, the researchers ran two ideation workshops using different approaches, with the goal of producing ideas that were feasible and relevant for applications in a hospital intensive care unit (ICU). A team of clinicians and data scientists working to improve critical care medicine in the ICU participated in the workshops.

The first approach was a traditional, user-centered ideation workshop. Clinicians were encouraged to recall pain points and ideate on potential AI-enabled solutions. Data scientists weighed in on whether training data existed and whether a given concept could be built. This brainstorming approach did not account for what AI can and cannot do well.

The second approach started with an overview of the AI examples and capabilities. During ideation, clinicians were asked whether they could think of situations where a given AI capability might be useful. Data scientists elaborated on what might be feasible. The moderators pushed back on concepts that required near-perfect AI model performance, probing for situations where moderate model performance would still be valuable.

While both workshops facilitated ideation, the outcomes differed. Only the second workshop yielded high-impact concepts that were low-effort to build (that is, that didn’t require near-perfect model accuracy). It also produced a greater breadth of ideas. These included AI that improved coordination between clinicians (e.g., generating a schedule for nurses and respiratory therapists for extubation) and systems that improved logistics and resource allocation (e.g., predicting which medications would be needed based on current patients, and pre-ordering them from the pharmacy).

In contrast, the first workshop yielded almost no low-effort concepts; its ideas tended to involve situations with high uncertainty, where the task would be difficult even for trained experts. For example: using deep learning to help determine the right amount of sedation for a patient on a ventilator. Too little or too much sedation would have serious consequences for the patient, making this a very high-risk use case.

Ideas were mapped on two pairs of axes: impact to people versus effort to build, and task expertise required versus AI performance required. Panel (b) shows more ideas (blue stickies) generated in the low-effort, high-impact quadrant; when these were re-mapped by AI performance and required task expertise, many of them fit moderate levels of AI performance and required less task expertise.

What was different about the workshop that yielded the high-impact, lower-effort ideas?

Reviewing AI capabilities and examples (that is, the bounds of solution space) prior to ideation seemed to have a major impact on the healthcare participants. They repeatedly recognized situations where a capability could be useful and applied it to a healthcare opportunity, leading to a more thorough mapping of the problem-opportunity space. The moderators also pushed participants to consider ideas where moderate model performance would be helpful. It’s also notable that both people deeply familiar with people problems (in this case, clinicians) and people deeply familiar with solutions (in this case, data scientists) engaged in the ideation process.

Takeaways

Finding problems we can solve for people with gen AI requires a symbiotic interplay between problem and solution space, as captured by the Problem-Solution Symbiosis framework. To begin implementing this framework, here are three things teams can do today:

1. Identify and define the key gen AI capabilities you’re considering, including example applications. For example, the capability “identify” could be defined as “notice if a specific item or class of items shows up in a set of like items.” Examples include “identify if a message is spam” and “identify if this is the user’s face.”

2. Review these capabilities before ideating about solutions. When you begin ideating, include people with deep knowledge of customer needs (e.g., design researchers) and people with deep knowledge of gen AI capabilities (e.g., machine learning engineers).

3. Map the generated ideas onto two axes: impact to the user and technical effort required to build (or required model accuracy, if you prefer). Prioritize ideas that require lower technical effort, leverage the technology’s strengths, and are grounded in observed user needs.
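For teams that track workshop output in a spreadsheet or script, the mapping step above can be made explicit in a few lines of Python. This is a minimal sketch, not part of the CMU study or any established tool: the 1 to 5 scores, the midpoint of 3, the `observed_need` flag, and the helper names `quadrant` and `shortlist` are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass

# Hypothetical 1-5 scale: a score above MIDPOINT counts as "high".
MIDPOINT = 3


@dataclass
class Idea:
    name: str
    impact: int          # hypothetical score: impact to the user (1 = low, 5 = high)
    effort: int          # hypothetical score: technical effort or required model accuracy
    observed_need: bool  # hypothetical flag: grounded in a need seen in user research?


def quadrant(idea: Idea) -> str:
    """Label the impact/effort quadrant an idea falls into."""
    impact = "high impact" if idea.impact > MIDPOINT else "low impact"
    effort = "high effort" if idea.effort > MIDPOINT else "low effort"
    return f"{impact}, {effort}"


def shortlist(ideas: list[Idea]) -> list[Idea]:
    """Keep high-impact, low-effort ideas grounded in observed needs,
    ordered by impact (highest first), then effort (lowest first)."""
    picks = [
        i for i in ideas
        if i.observed_need and quadrant(i) == "high impact, low effort"
    ]
    return sorted(picks, key=lambda i: (-i.impact, i.effort))


if __name__ == "__main__":
    # Placeholder ideas with made-up scores, purely for illustration.
    ideas = [
        Idea("Idea A", impact=5, effort=2, observed_need=True),
        Idea("Idea B", impact=4, effort=5, observed_need=True),
        Idea("Idea C", impact=4, effort=1, observed_need=True),
        Idea("Idea D", impact=5, effort=1, observed_need=False),
    ]
    for idea in ideas:
        print(f"{idea.name}: {quadrant(idea)}")
    print("Shortlist:", [i.name for i in shortlist(ideas)])
```

Here Idea B drops out for high effort and Idea D for lacking an observed need, leaving Idea A and Idea C. The scores themselves still come from the team’s discussion; the code only makes the prioritization rule visible and repeatable.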

This approach can help us build gen AI solutions that are valuable to people (and feasible to build), rather than creating the gen AI equivalent of the Juicero.

Human-Computer Interaction News

Meta Reality Labs Research makes real objects virtual so they can be interacted with in real time: Researchers have developed a way to digitize real-world objects and erase their physical counterparts. In a video that made the rounds this past week thanks to a Road to VR post, we can see how these digitized objects are designed for coherence between the real and virtual worlds, to increase immersion. When the user gets close to the real object, the digital version disappears and the real object is displayed. Imagine a game where a character can scuttle off with a real object from your living room: great potential for mixed reality gaming and entertainment use cases.

Stanford Institute for Human-Centered Artificial Intelligence (HAI) Davos 2024 roundup: AI implementation and risks were front and center at Davos this year. This article covers key insights from HAI’s vice director, James Landay, who participated in the 2024 World Economic Forum.

MIT research suggests that AI will not be stealing jobs anytime soon: This paper made headlines with its prediction that only 23% of wages linked to vision-related tasks could be cost-effectively replaced by AI.

Building gen AI technologies and looking to get crisp on use cases that leverage your product’s strengths? Sendfull can help. Reach out at hello@sendfull.com

That’s a wrap 🌯. More human-computer interaction news from Sendfull next week.