ep. 10. Designing “key moments” for spatial computing: lessons from embodied cognition


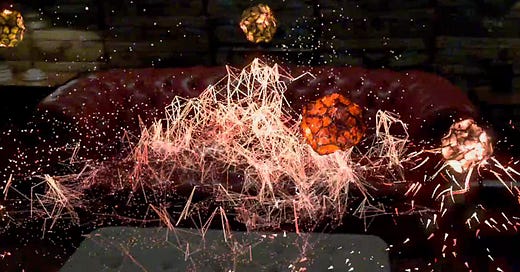

It was 2018, and I had just gotten my hands on a Magic Leap headset. My goal: try Tónandi, a new interactive audio-visual mixed reality (MR) experience by one of my favorite bands, Sigur Rós. I was immersed in a spatial landscape of sound and visuals (while still being able to see my physical environment), in which ethereal jellyfish-like beings responded to the touch of my hands, altering the music.

There was one key moment that I still remember, over five years later: I'd swear I felt myself touch one of these digital beings, despite there being no haptic interface.

What did Tónandi get right to create this compelling experience? How does this relate to Apple’s recently-shared spatial design insights? How can you design your own key moments for spatial computing? We’ll cover these topics in today’s newsletter, infusing a healthy dose of cognitive science to explain the “why” behind key moments.

What is a key moment?

Apple recently published their takeaways for designing for Vision Pro, synthesizing their conversations with developers at WWDC23. There are several helpful tips in the article for people building spatial experiences. For example, they offer pointers on how to design for comfort, such as: the more content is centered in the field of view, the more comfortable it is for your eyes.

However, what resonated most with me was their guidance on identifying a potential “key moment” for your app: a feature or interaction that takes advantage of the unique capabilities of visionOS. Examples of key moments included opening a panoramic photo and having the image wrap around your field of view, or, if you’re building a writing app, creating a focus mode in which users fully immerse themselves in a spatial audio soundscape. Neither is something you can do on a smartphone or a laptop.

I know from a decade of experience working in spatial computing that a key moment is not limited to leveraging the unique capabilities of visionOS - it’s about leveraging the unique capabilities of a spatial medium. That memorable experience in Tónandi was a key moment - the end result of effectively leveraging what I’ve called the “strengths” of spatial computing, relative to traditional 2D screens like those on smartphones and laptops.

What makes spatial content special, and what’s the recipe for a spatial key moment?

This is your brain on space

Interacting with spatial digital content is fundamentally different from how we interact with 2D digital content (e.g., on laptops or smartphones). To more deeply understand how to design key moments, let’s take a look at how our brains perceive space and objects within it.

Our brains represent space across several different “zones”:

personal space: space occupied by our body

peripersonal space: space immediately around our body that the brain encodes as perceived reaching and grasping distance

extrapersonal space: space beyond reaching and grasping distance

Cognitive neuroscience research has shown that we can remap these zones through the use of physical objects (aka tools). For example, when we use a stick to interact with our environment, space previously encoded as extrapersonal is remapped to peripersonal. We can even remap how we represent our bodies in space. For example, the rubber hand illusion is a perceptual illusion in which participants perceive a physical rubber model hand as part of their own body. This phenomenon can be replicated in virtual reality (VR) and MR using a digital model hand instead of a physical one.
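To make the three zones and the tool-use remapping concrete, here is a minimal Python sketch. It is a toy geometric model, not a neuroscience model. The `Body` class, the `classify_zone` function, and every distance (body radius, arm reach, tool length) are hypothetical values chosen for illustration. The peripersonal boundary is treated as reaching distance, and holding a tool pushes that boundary outward.

```python
import math
from dataclasses import dataclass


@dataclass
class Body:
    # Hypothetical, illustrative values in meters; not measured data.
    body_radius_m: float = 0.25   # toy stand-in for "space occupied by our body"
    arm_reach_m: float = 0.7      # reaching and grasping distance beyond the body
    tool_length_m: float = 0.0    # e.g., a held stick extends effective reach


def classify_zone(body: Body, point, origin=(0.0, 0.0, 0.0)) -> str:
    """Classify a 3D point as personal, peripersonal, or extrapersonal space."""
    distance = math.dist(point, origin)
    if distance <= body.body_radius_m:
        return "personal"
    # Reach boundary = body + arm + any tool being held.
    if distance <= body.body_radius_m + body.arm_reach_m + body.tool_length_m:
        return "peripersonal"
    return "extrapersonal"


target = (1.2, 0.0, 0.0)  # a point 1.2 m in front of the body center
print(classify_zone(Body(), target))                   # extrapersonal
print(classify_zone(Body(tool_length_m=0.5), target))  # peripersonal
```

The same point changes zones once the hypothetical stick is added. This mirrors, in a very simplified way, the remapping described above.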

These are all examples of embodied cognition: an approach to understanding our mental processes, which holds that the environment we sense and perceive with our bodies becomes built into and linked with our cognition. In other words, the objects we interact with become encoded in our brain.

“We shape our tools and thereafter our tools shape us” - Marshall McLuhan, 1964.

This is your brain on digital, spatial content

People quickly ascribe real-world properties to digital, spatial objects. That is, you see a digital volumetric “thing” and start treating it as you would if it were physical: something you could reach out and grasp, rotate, etc. How far our brain “buys in” to this digital content being real (i.e., being “immersed”) can be modulated by factors like how consistent a mental model you build for your user (i.e., coherence).

Interacting with digital spatial content starts to remap our brain. Consider the “very long arm illusion”: research participants experienced ownership over a virtual arm viewed in a VR headset, and this ownership persisted even when the virtual arm was up to three times (!) the length of their real arm.

Putting all of this together, spatial content creates a new layer of reality onto which we can map - and therefore extend - our cognition. To do this, we use a cognitive playbook similar to the one we use when moving through the physical world. This is what makes spatial content special relative to non-spatial, 2D content.

Recipe for a key moment

How do we leverage special, spatial content to design for key moments?

Ingredients

Let’s start with the raw ingredients that make spatial experiences unique relative to 2D experiences on a smartphone or laptop:

“Real” spatial depth

We have years of experience navigating through space to interact with objects in the physical world. We naturally perceive the location, movement, and actions of our bodies (“proprioception”). This experience effortlessly maps to digital, spatial environments - Tónandi was no exception.

Let’s take the example of a task where I need to move a digital cube behind another cube. People are able to easily complete this task in-headset, manipulating the cubes similar to how you would move a physical wooden block behind another wooden block. In contrast, performing this same task on a 2D screen requires abstracting depth, such that you’re unable to naturally interact with content, even if it’s rendered as “3D”.

Key moment application: Think about 2D apps or features that would be made richer through physical manipulation - for instance, learning how the brain works, or how to assemble a piece of furniture.

Embodied interactions

Closely related to spatial input, our bodies can become the UI in spatial environments, mirroring how we move through the physical world. The ability to use my hands to interact with Tónandi’s digital content is an example of an embodied interaction.

Key moment application: For a given piece of spatial content, imagine it was a real object - how would you interact with it? Rotate it with your hand? Pull it in closer to inspect it? Leverage that interaction in your spatial experience.

Situation awareness

In MR (but not VR), you remain aware of your physical environment rather than being hunched over your phone or laptop. You can take this one step further by blending digital and physical content in your environment while staying heads-up, allowing you to immerse yourself further in the experience. Again, this is something you can’t do on 2D screens.

This ingredient is tricky because your digital content needs to “play nice” with the physical world (e.g., accurate occlusion) - otherwise, our brains will pick up on the mismatch, breaking immersion. The first Magic Leap headset did not excel at occluding fast-moving objects in real time. Tónandi’s ethereal, slow-moving content worked well given this constraint.
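For readers curious what “accurate occlusion” involves, one common approach is a per-pixel depth test. A virtual pixel is drawn only where the digital content is closer to the viewer than the physical surface at that pixel. The sketch below assumes you already have a per-pixel depth map of the physical scene; how a headset estimates that map is out of scope here. The `occlusion_mask` function and the tiny example grids are hypothetical. This is not a description of how Magic Leap or visionOS actually implement occlusion.

```python
import math
from typing import List

Grid = List[List[float]]


def occlusion_mask(virtual_depth: Grid, physical_depth: Grid) -> List[List[bool]]:
    """Return a grid of booleans: True where the virtual pixel should be drawn.

    Depths are distances from the viewer in meters; math.inf in virtual_depth
    means there is no digital content at that pixel.
    """
    return [
        [v < p for v, p in zip(v_row, p_row)]
        for v_row, p_row in zip(virtual_depth, physical_depth)
    ]


# Hypothetical 2x3 view: a jellyfish-like object at 1.5 m, partly hidden by a
# physical hand at 0.5 m in the top-left pixel; a wall sits at 3.0 m elsewhere.
virtual = [[1.5, 1.5, math.inf],
           [1.5, 1.5, math.inf]]
physical = [[0.5, 3.0, 3.0],
            [3.0, 3.0, 3.0]]

print(occlusion_mask(virtual, physical))
# [[False, True, False], [True, True, False]]
```

If the physical depth map lags behind a fast-moving real object, this comparison runs on stale values and the mismatch becomes visible. That is one plausible reason slow-moving content is more forgiving of occlusion limits.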

Key moment application: Consider how you can enrich people’s physical surroundings (being mindful of technology constraints), or help them perform real-world tasks better than they could without the headset (e.g., overlaying a sewing pattern on a garment).

Combine all the things

Now that we have the ingredients, how do you combine them to create a key moment?

Provide real-time feedback through multiple senses

When we move through the physical world using spatial input and embodied interactions, we get real-time feedback from an array of senses, such as visual, auditory, and haptic. Tónandi got this right through its combination of audio, visual, and tactile inputs and responses: by touching a visual object, I altered the audio, all in real time.

Build for coherence

Coherence means that the mental model the user has built in the spatial computing environment is preserved throughout their in-headset experience. A lack of coherence breaks immersion, and can be hard to recover from. The world Tónandi built worked together, from how it blended with the physical environment (e.g., anchoring, occlusion), to aesthetic consistency within the digital experience.

Set the stage

Ease your users into the experience rather than throwing them into the deep end of immersion all at once. Make sure they’re oriented before transporting them. For instance, you might start with spatial audio and then have digital content sprout up in the physical environment, inviting the user to interact with it, as Tónandi does.

Putting these pieces together, you can leverage the strengths of spatial computing over 2D screens to create key moments that enhance your user’s experience and showcase the value of spatial computing.

Building apps for Apple Vision Pro? Sendfull can help you discover and build for your key moments. Reach out at hello@sendfull.com

Human Computer Interaction News

2024 predictions: a smart glasses turning point (AR Insider): 2024 will bring acceleration and meaningful steps forward for smart glasses. Current signals supporting this prediction include the Q4 launches of Ray-Ban Meta Smart Glasses and Xreal Air 2. The former brought improvements to sound quality and mics compared to its predecessor, and alluded to future capabilities like AI-fueled object identification. Xreal Air 2 focused on a broadly appealing use case: private media and gaming on large virtual displays.

Researching the usability of early generative-AI tools (Nielsen Norman Group): Some strategies to create relevant usability test tasks for gen AI tools include: asking participants to bring a task of their own (not previously tried on ChatGPT), leveraging participants’ screener responses (e.g., about text-based artifacts they worked on in their jobs, or what they had previously used generative-AI tools for), and leveraging ChatGPT to create scenarios and tasks.

Stability AI’s plan to counter AI’s Silicon Valley bias (Rest of World): Over the past year, Stability AI Japan has released several new products, including a language model, an image-to-text generator, and a text-to-image generator tailored to Japanese language and culture. In the words of Jerri Chi, head of Stability AI Japan, “It’d be dystopian if all AI systems had the values of a 35-year-old male in San Francisco.”

That’s a wrap 🌯 - for 2023! We’ll be back in January for 2023 reflections and 2024 predictions. Happy Holidays, everyone! ✨